Kubernetes 101: Storage

Facilitating data persistence and sharing, the Volume component is central to Kubernetes' functionality.

Introduction

Kubernetes, the leading container orchestration platform, is built from a range of components and concepts, and one of the most important is the Volume. This guide unpacks the technical aspects of Kubernetes Volumes, with concrete examples along the way.

Understanding Kubernetes Volume

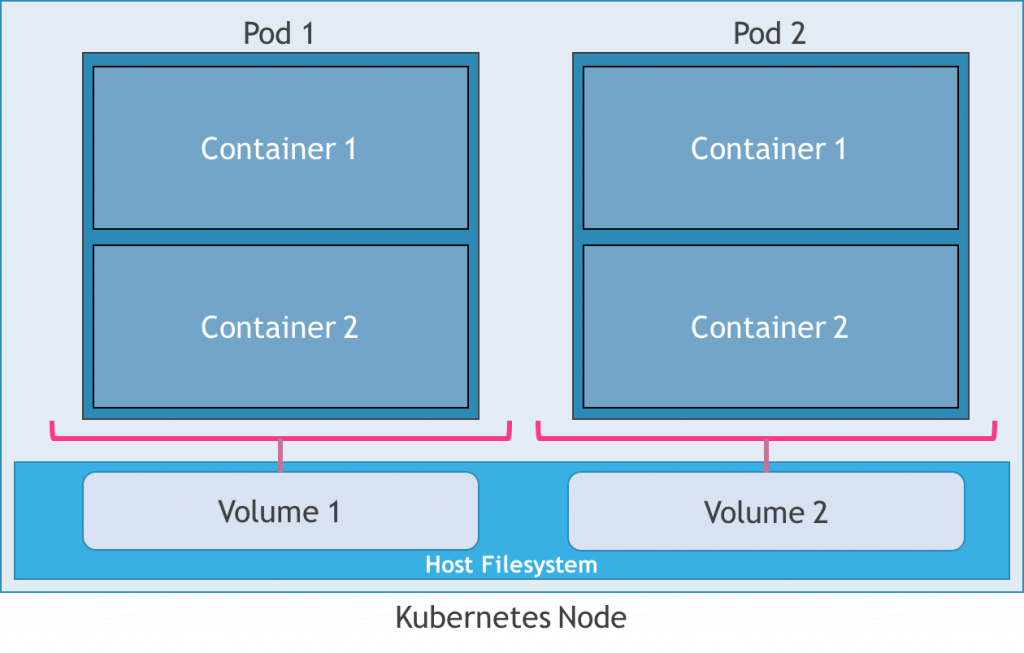

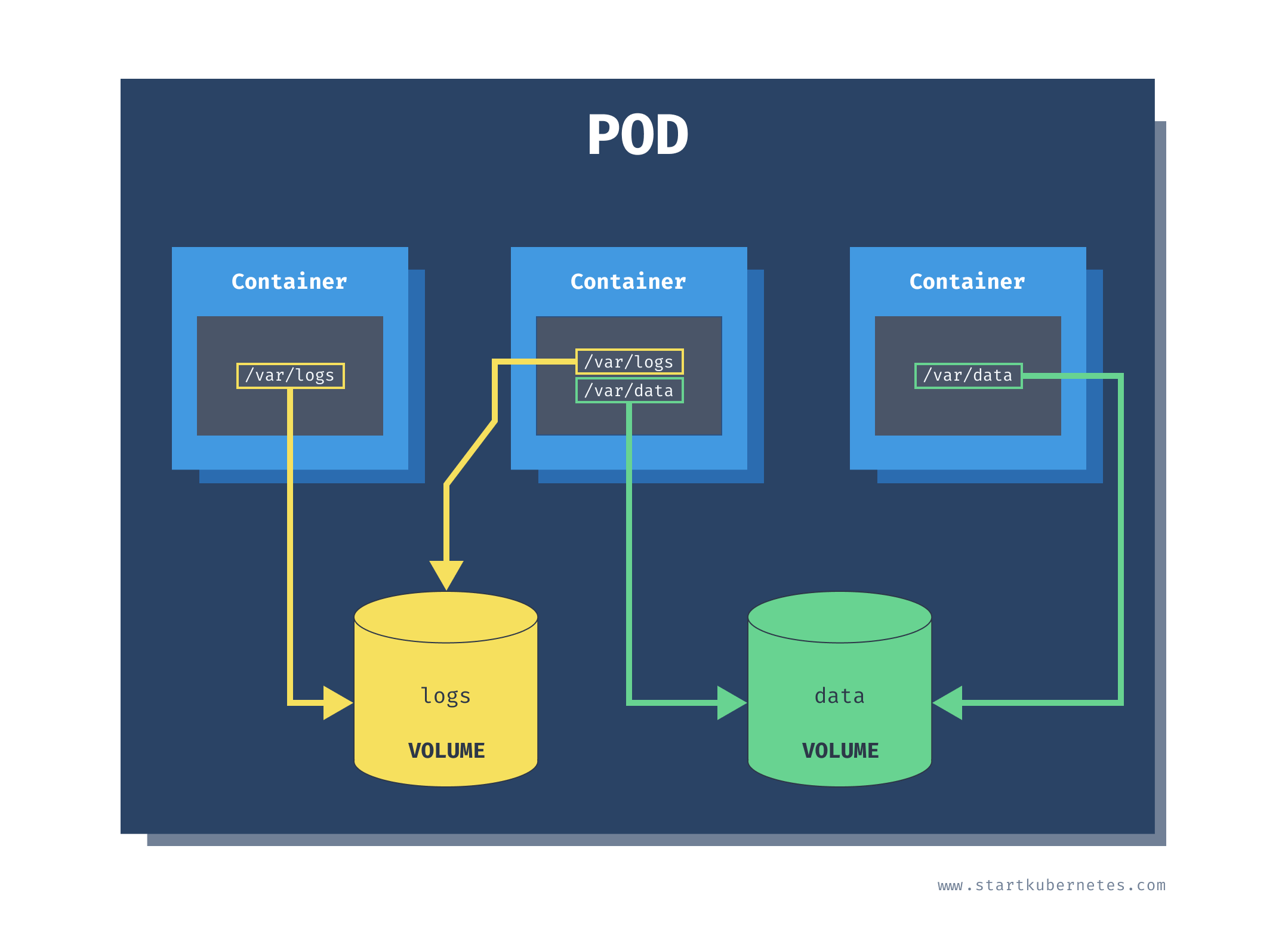

At its core, a Kubernetes Volume is a storage unit attached to a Pod. It lets data survive container restarts and lets multiple containers in the Pod share files. The concept is simple, but it goes beyond a plain directory of data: an ephemeral Volume lives exactly as long as the Pod it belongs to, while persistent storage can outlive it.

Kubernetes supports an array of Volume types, each catering to specific needs and environments. The emptyDir Volume, created when a Pod is assigned to a Node, is ideal for temporary or scratch space. The hostPath Volume exposes a file or directory from the Node's filesystem to the Pod, which is useful for single-node testing. For long-term data, a Pod mounts a PersistentVolume (PV) through a persistentVolumeClaim; the PV persists beyond the lifespan of individual Pods.

For instance, consider a Pod hosting a web application with two containers: one serving the web interface and another managing data. An EmptyDir Volume could be employed here for transient data, such as session or cache data, which need not persist beyond the Pod's life. However, for storing user data, a PersistentVolume would be apt, ensuring data survival beyond the lifecycle of the Pod.
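The two-container scenario above could be sketched as the following Pod manifest. It is a minimal illustration, not a production setup: the Pod name, container names, images, and mount paths are all assumptions chosen for the example.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: web-app                  # hypothetical name
spec:
  containers:
    - name: web                  # serves the web interface
      image: nginx:1.27          # illustrative image
      volumeMounts:
        - name: cache
          mountPath: /var/cache/app
    - name: data-manager         # writes transient data the web container can read
      image: busybox:1.36        # illustrative image
      command: ["sh", "-c", "while true; do date > /cache/heartbeat; sleep 30; done"]
      volumeMounts:
        - name: cache
          mountPath: /cache
  volumes:
    - name: cache
      emptyDir: {}               # created with the Pod, removed when the Pod is deleted
```

Both containers see the same directory under different mount paths. Anything written there is lost when the Pod goes away, which is why user data belongs on a PersistentVolume instead.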

How Kubernetes Volume Works

A Kubernetes Volume's lifecycle is closely linked to its Pod. When the Pod starts, each Volume is set up and, where applicable, populated from its source (for example a ConfigMap or an existing disk). Ephemeral Volumes such as emptyDir cease to exist when the Pod is deleted. The exception is storage backed by a PersistentVolume, whose data outlives the Pod.

The relationship between a Kubernetes Volume and a Pod is fundamental. During the deployment of a Pod, the Kubernetes Volume is mounted onto a specified path in the container. This operation allows the application housed in the container to access the stored data seamlessly. For instance, a database application running in a container may require access to a PersistentVolume that stores the actual database files. When the Pod is deployed, this PersistentVolume is mounted to a specific path that the database application expects, allowing it to interact with the data as though it were on a local filesystem.

Volume Types

In Kubernetes, a Volume is a directory, possibly with some data in it, which is accessible to the Containers in a Pod. Kubernetes supports many types of Volumes. A Pod can use any number of Volume types simultaneously. Let's look at some common Kubernetes volume types:

- emptyDir: A temporary directory that shares a Pod's lifetime.

- hostPath: Mounts a file or directory from the host node's filesystem into your Pod.

- awsElasticBlockStore: Mounts an Amazon Web Services (AWS) EBS volume into your Pod.

- gcePersistentDisk: Mounts a Google Compute Engine (GCE) Persistent Disk into your Pod.

- azureDisk / azureFile: Mounts an Azure Disk volume or Azure File share into your Pod.

- nfs: Mounts an NFS share into your Pod.

- persistentVolumeClaim: Provides a way for Pods to use Persistent Volumes.

- configMap, secret, downwardAPI: Special types of volumes that provide a Pod with specific pieces of information.

- csi: A volume type that lets Pods use third-party storage providers through Container Storage Interface drivers.

| Volume Type | Description | Pod-Level | Persistent |
|---|---|---|---|
| emptyDir | Temporary directory on node | Yes | No |
| hostPath | Directory on node | Yes | Yes |
| awsElasticBlockStore / gcePersistentDisk / azureDisk / azureFile | Cloud provider-specific storage | No | Yes |
| nfs | Network File System share | No | Yes |
| persistentVolumeClaim | Use a Persistent Volume | No | Yes |
| configMap / secret / downwardAPI | Expose pod info and cluster info | Yes | No |
| csi | Use third-party storage providers | No | Depends on the specific CSI driver |

Kubernetes Storage Providers

Kubernetes Storage Providers underpin the Volume abstraction, serving as the physical backends where data is stored. These providers span an array of options, from local, on-premises storage systems to cloud-based solutions, each with their unique offerings.

For instance, NFS (Network File System) can be used for on-premises deployments, allowing Pods to read from or write to data stored on your existing network storage. For cloud-based solutions, providers such as Amazon Web Services (awsElasticBlockStore) and Google Cloud (gcePersistentDisk) offer their own unique storage interfaces.
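As a rough sketch of the on-premises case, a Pod can mount an existing NFS export directly with the nfs volume type. The server hostname, export path, Pod name, and image below are placeholders invented for illustration.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nfs-reader                     # hypothetical name
spec:
  containers:
    - name: app
      image: busybox:1.36              # illustrative image
      command: ["sh", "-c", "ls /mnt/shared && sleep 3600"]
      volumeMounts:
        - name: shared
          mountPath: /mnt/shared
  volumes:
    - name: shared
      nfs:
        server: nfs.example.internal   # assumed hostname of your NFS server
        path: /exports/shared          # assumed export path
        readOnly: true
```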

In essence, the Storage Provider you choose depends on your particular infrastructure and requirements. A business running its applications on AWS would benefit from using awsElasticBlockStore, while an organization with substantial on-premises storage hardware might opt for NFS.

| Storage Provider | Type | Dynamic Provisioning | Access Modes | Snapshot Support | Volume Expansion |
|---|---|---|---|---|---|
| AWS Elastic Block Store (EBS) | Block | Yes | ReadWriteOnce | Yes | Yes |
| Google Persistent Disk | Block | Yes | ReadWriteOnce, ReadOnlyMany | Yes | Yes |
| Azure Disk | Block | Yes | ReadWriteOnce | Yes | Yes |
| Azure File | File | Yes | ReadWriteOnce, ReadOnlyMany, ReadWriteMany | Yes | Yes |
| NFS | File | No* | ReadWriteOnce, ReadOnlyMany, ReadWriteMany | No* | No* |
| Local | Block, File | No* | ReadWriteOnce | No | Yes |

Remember, each provider comes with its advantages, limitations, and performance characteristics. Therefore, your selection should be based on a careful evaluation of your application's needs, such as I/O performance, data resilience, and cost-effectiveness.

The Container Storage Interface (CSI)

The Container Storage Interface (CSI) emerged as a standard for exposing arbitrary block and file storage systems to containerized workloads. Kubernetes adopted CSI to allow third-party storage providers to develop solutions without needing to add to the core Kubernetes codebase.

CSI enables Kubernetes to communicate with a wide range of storage backends, making it possible to use both traditional storage systems and newer software-defined storage solutions. Whether your storage is an on-premises NFS server or a cloud service such as Amazon EBS, applications running on Kubernetes can reach it through the matching CSI driver.

Consider an example where your organization wants to use a new, cutting-edge storage solution that isn't natively supported by Kubernetes. With CSI, the storage provider can create a plugin that allows Kubernetes to communicate with the storage solution, providing seamless integration with your Kubernetes workloads.
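From the cluster's point of view, a CSI plugin usually shows up as a provisioner name on a StorageClass. The sketch below assumes the AWS EBS CSI driver is already installed in the cluster; the class name is made up for the example, and a different vendor's driver would use its own provisioner string and parameters.

```yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: csi-example              # hypothetical name
provisioner: ebs.csi.aws.com     # driver name registered by the AWS EBS CSI driver
parameters:
  type: gp3                      # driver-specific parameter; other drivers define their own
volumeBindingMode: WaitForFirstConsumer
```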

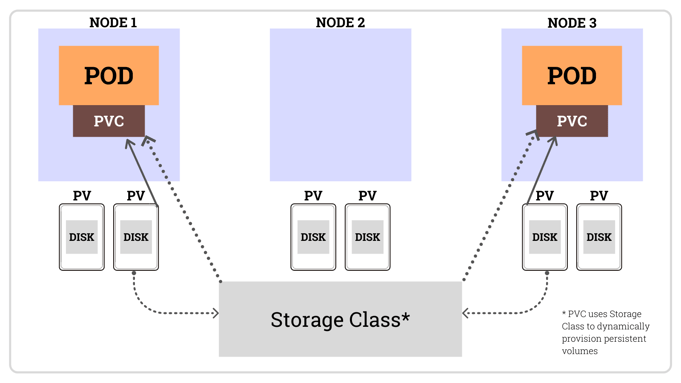

Storage Classes in Kubernetes

StorageClasses in Kubernetes play a crucial role in dynamic volume provisioning. They enable you to define different 'classes' or tiers of storage, and volumes are provisioned according to these classes based on user demand. Let's delve into the detailed implementation of StorageClasses.

StorageClasses in Kubernetes provide a way for administrators to describe the "classes" of storage they offer. When creating a PersistentVolumeClaim, users can request a particular class to get matching PersistentVolumes.

StorageClasses are typically used in one of four provisioning patterns. These are ways of pairing claims with storage, not distinct kinds of StorageClass object:

- Default: This class is used when no storageClassName is specified in the PersistentVolumeClaim. It suits workloads that don't need a specific storage class.

- Manual: In this case, you manually create the PersistentVolume that points to the physical storage asset. This method gives you more control over the underlying storage features but is not dynamic.

- Static Provisioning: An administrator creates PersistentVolumes ahead of time, and Kubernetes binds incoming PersistentVolumeClaims to a matching PersistentVolume automatically.

- Dynamic Provisioning: Dynamic provisioning eliminates the need for cluster administrators to pre-provision storage. Instead, storage is provisioned automatically when users request it.

| Storage Class | Description | Persistent Volume Creation |
|---|---|---|
| Default | Used when no storageClassName is specified. | Automatic (uses the default storage class) |
| Manual | You manually create the PersistentVolume. | Manual |
| Static Provisioning | Create PersistentVolumes manually but manage PersistentVolumeClaims dynamically. | Manual PV, Dynamic PVC |
| Dynamic Provisioning | Automatically provision storage when it is requested by users. | Automatic |

Understanding StorageClasses

A StorageClass is a Kubernetes object that defines the nature of storage provisioned dynamically for PersistentVolumes that consume it. It encapsulates details like the storage provisioner, parameters specific to the provisioner, and the reclaim policy for dynamically provisioned PersistentVolumes.

StorageClasses are incredibly flexible, allowing administrators to define as many classes as needed. This flexibility enables the creation of various storage tiers, such as 'fast' for SSD-based storage, 'slow' for cheaper, HDD-based storage, or tiers based on IOPS requirements or backup policies.

Implementing StorageClasses

To create a StorageClass, you'd first need to define it in a YAML manifest, specifying the details like provisioner, parameters, and reclaimPolicy.

Let's consider a simple example where we create a StorageClass for Google Cloud's standard persistent disk storage:
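A manifest consistent with the description that follows would look roughly like this. It is a sketch assembled from the field values named in the text (name, provisioner, disk type, reclaim policy, binding mode), not a verified copy of any particular cluster's configuration.

```yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: standard
provisioner: kubernetes.io/gce-pd        # Google Cloud persistent disk provisioner
parameters:
  type: pd-standard                      # standard (non-SSD) persistent disk
reclaimPolicy: Delete                    # remove the disk once its claim is deleted
volumeBindingMode: WaitForFirstConsumer  # provision only when a Pod is scheduled
```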

In this example, we define a StorageClass with the name standard. The provisioner is set to kubernetes.io/gce-pd, which indicates that the volumes are provisioned using Google Cloud's persistent disk storage. The parameters field specifies that we use 'pd-standard' type disks, and the reclaimPolicy is set to 'Delete', which means the provisioned volume is automatically deleted when the PersistentVolumeClaim bound to it is deleted.

The volumeBindingMode parameter set to WaitForFirstConsumer means that a volume will only be provisioned when a pod using the PVC is scheduled to a node. This mode is essential for volume types that are not universally available or may have restrictions on which nodes they can be attached to.

Using a StorageClass

Once a StorageClass is created, it can be used in a PersistentVolumeClaim by specifying the StorageClass's name in the storageClassName field.
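A claim against the standard class might be written as follows. The size and access mode match the description in the text; the claim name is a hypothetical placeholder.

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: standard-claim         # hypothetical name
spec:
  storageClassName: standard   # must match the StorageClass name
  accessModes:
    - ReadWriteOnce            # read-write by a single node
  resources:
    requests:
      storage: 8Gi
```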

In this example, we create a PersistentVolumeClaim that uses our previously defined standard StorageClass. This claim requests an 8Gi volume that can be mounted with read-write privileges by a single node.

Automating Provisioning with Default StorageClasses

It's worth noting that Kubernetes also allows you to set a default StorageClass. Any PersistentVolumeClaim that doesn't specify a storageClassName will automatically use the default StorageClass.

To set a StorageClass as default, you'd add the storageclass.kubernetes.io/is-default-class annotation to the StorageClass object, like so:
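A sketch of the annotated object, assuming the same standard class on Google Cloud persistent disks used earlier in this section:

```yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: standard
  annotations:
    # The value must be the string "true", hence the quotes.
    storageclass.kubernetes.io/is-default-class: "true"
provisioner: kubernetes.io/gce-pd
parameters:
  type: pd-standard
```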

Here, the 'standard' StorageClass is set as the default, making it automatically selected for PVCs that don't explicitly declare a storageClassName.

With StorageClasses in place, Kubernetes can automatically manage the provisioning and lifecycle of PersistentVolumes based on predefined classes, enhancing storage flexibility and efficiency.

Example of Storage Class Implementation
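The manifest discussed in this section can be reconstructed from the field-by-field breakdown below. Treat it as a sketch built from those stated values:

```yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: my-storage-class
provisioner: kubernetes.io/aws-ebs       # AWS Elastic Block Store provisioner
parameters:
  type: gp2                              # General Purpose SSD
  fsType: ext4                           # filesystem created on the volume
reclaimPolicy: Retain                    # keep the PV after its PVC is deleted
volumeBindingMode: WaitForFirstConsumer  # provision when a Pod is scheduled
```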

Let's break down this manifest:

- apiVersion: storage.k8s.io/v1: This specifies the API version to use.

- kind: StorageClass: This defines the kind of Kubernetes object to create, in this case, a StorageClass.

- metadata.name: my-storage-class: The name of the StorageClass that we're creating.

- provisioner: kubernetes.io/aws-ebs: The provisioner is responsible for creating and deleting storage resources. In this case, it's set to use the AWS EBS provisioner, indicating that we are using Amazon Web Services' Elastic Block Store.

- parameters.type: gp2: This is an AWS-specific parameter, and it's specifying the type of EBS volume to provision. 'gp2' stands for General Purpose SSD, a type of EBS storage.

- parameters.fsType: ext4: This is another AWS-specific parameter, specifying the filesystem type of the provisioned EBS volumes. Here, it's set to use ext4.

- reclaimPolicy: Retain: The reclaim policy for the dynamically-provisioned PersistentVolumes. The 'Retain' policy means the PV will not be deleted when the corresponding PVC is deleted.

- volumeBindingMode: WaitForFirstConsumer: This indicates when to bind and provision a PersistentVolume. The 'WaitForFirstConsumer' mode means the PV will only be provisioned when a Pod using the PVC is scheduled. This mode is crucial for volume types that may have restrictions on which nodes they can be attached to.

By applying this manifest, Kubernetes creates a StorageClass named 'my-storage-class' with the specified configuration. Now, whenever a PersistentVolumeClaim specifies storageClassName: my-storage-class, a new PersistentVolume will be dynamically provisioned on AWS EBS with the specified parameters and attached to the claim.

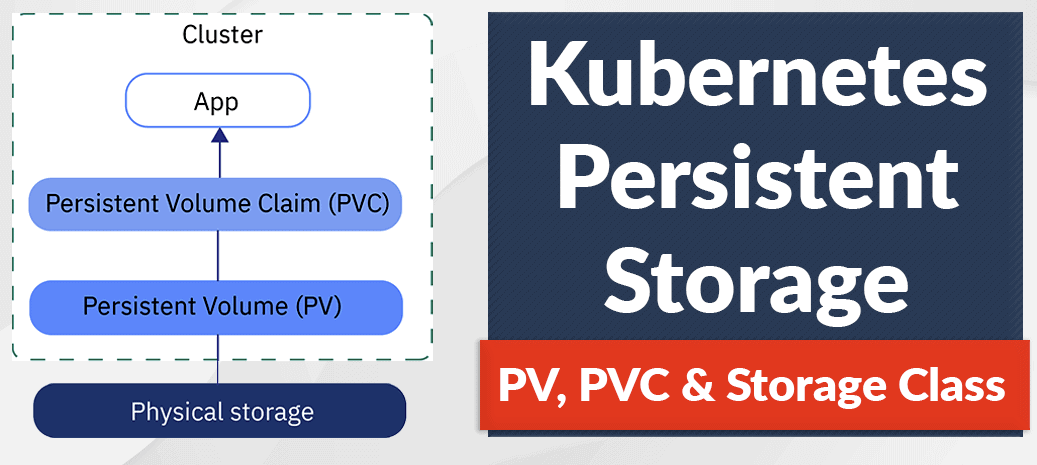

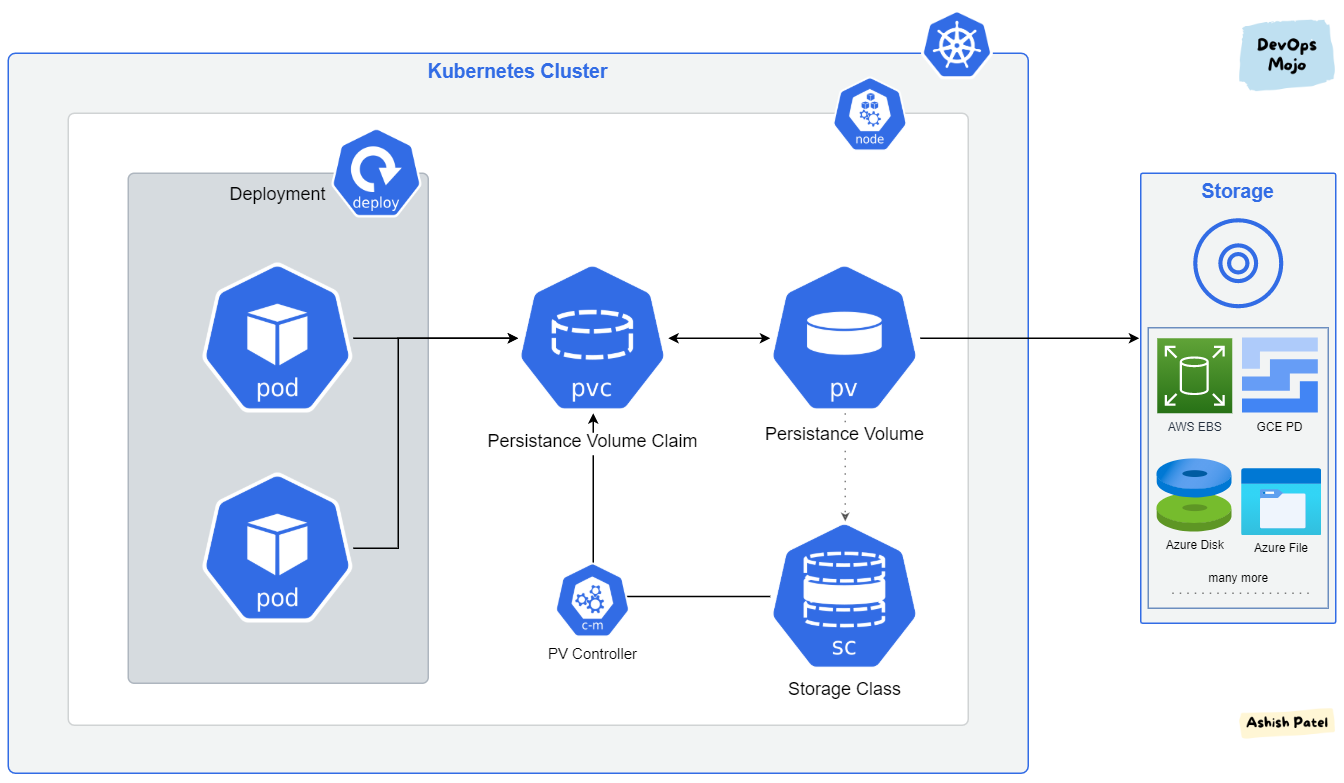

PV and PVC

In Kubernetes, PersistentVolume (PV) and PersistentVolumeClaim (PVC) are two core components of the storage system, designed to aid in storage orchestration. To understand them, we need to delve deeper into their definition, how they operate, their life cycle, and their mode of access.

Persistent Volumes (PV)

A PersistentVolume (PV) is a piece of storage in the cluster. It is a cluster-wide resource, independent of any individual pod's life cycle. PVs can be provisioned either statically (pre-provisioned by an administrator) or dynamically (through StorageClasses).

PVs can be backed by various storage systems, including Network File System (NFS), iSCSI, cloud-based storage like AWS's Elastic Block Store (EBS), Google Cloud's Persistent Disk, Azure Disk, among others.

Persistent Volume Claims (PVC)

A PersistentVolumeClaim (PVC), on the other hand, is a request for storage by a user. Just as pods consume node resources such as CPU and memory, PVCs consume PV resources. PVCs allow users to consume abstract storage resources. Once a PVC is created, the Kubernetes control plane looks for a suitable PV to bind to the PVC. If a suitable PV is found, the two are bound one-to-one, and the PV stays reserved for that PVC until the claim is deleted.

How PV and PVC Work Together

The relationship between a PV and a PVC is a binding one. The PVC lays out the specifications for storage, and if a PV meeting those specifications is available, the PVC binds to it. When the PVC is deleted, depending on the reclaim policy of the PV, the PV can either be retained, deleted, or recycled (if supported by the storage provider).

For example, a user could create a PVC asking for 10Gi of storage. If an available PV provides at least that much storage and matches the requested access modes and storage class, the PVC binds to it. If no such PV exists and none can be provisioned dynamically, the PVC remains unbound (Pending) until a suitable PV appears.

Lifecycle

PVs and PVCs have their lifecycles. A PV's lifecycle is independent of any Pod or PVC, while a PVC is born when it's defined and dies when it's deleted. A PV can have different reclaim policies: Retain (PV is not deleted after a PVC is deleted), Delete (PV is automatically deleted when PVC is deleted), or Recycle (deprecated in Kubernetes 1.13).

Access Modes

PVs and PVCs support different access modes:

- ReadWriteOnce: The volume can be mounted as read-write by a single node.

- ReadOnlyMany: The volume can be mounted read-only by many nodes.

- ReadWriteMany: The volume can be mounted as read-write by many nodes.

It's important to note that not all storage providers support all access modes. For instance, a standard GCE Persistent Disk supports ReadWriteOnce and ReadOnlyMany but not ReadWriteMany, whereas NFS supports ReadWriteMany.

Utilization

To use a PV, a Pod goes through a PVC. You create the PVC, specifying the storage requirements and access modes, and then reference the PVC's name as a volume in your Pod's configuration. The Volume is then mounted into the desired containers in your Pod at a mount path you specify. The application in the container interacts with the volume as if it were a local filesystem, oblivious to the nature of the underlying storage mechanism.

Here is a basic manifest for a PersistentVolume (PV):
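The sketch below is assembled from the field values listed in the breakdown that follows (name, capacity, volume mode, access mode, reclaim policy, storage class, and host path):

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: my-pv
spec:
  capacity:
    storage: 10Gi
  volumeMode: Filesystem
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: slow
  hostPath:
    path: /data/my-pv          # single-node testing only
```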

Now let's break it down:

- apiVersion: v1: Specifies the API version to use.

- kind: PersistentVolume: Defines the kind of Kubernetes object to create, in this case, a PersistentVolume.

- metadata.name: my-pv: The name of the PV that we're creating.

- spec.capacity.storage: 10Gi: The storage capacity of the PV. This is the amount of storage that the volume provides.

- spec.volumeMode: Filesystem: Defines whether the storage used is block-based or filesystem-based. In this case, it is filesystem-based.

- spec.accessModes: [ReadWriteOnce]: The access modes for the PV. ReadWriteOnce means the volume can be mounted as read-write by a single node.

- spec.persistentVolumeReclaimPolicy: Retain: The reclaim policy for the PV. When a PVC is deleted, the Retain policy means the PV will not be deleted.

- spec.storageClassName: slow: The StorageClass associated with the PV. The StorageClass should be created before referencing here.

- spec.hostPath.path: /data/my-pv: The path on the host where the data will be stored. hostPath should only be used for single-node testing, not for multi-node clusters.

And here is a manifest for a PersistentVolumeClaim (PVC):
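As with the PV, this sketch is built from the values given in the breakdown that follows:

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: my-pvc
spec:
  storageClassName: slow       # must match the PV's class
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 5Gi             # the 10Gi PV satisfies this request
```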

Let's break this down:

- apiVersion: v1: Specifies the API version to use.

- kind: PersistentVolumeClaim: Defines the kind of Kubernetes object to create, in this case, a PersistentVolumeClaim.

- metadata.name: my-pvc: The name of the PVC that we're creating.

- spec.storageClassName: slow: The StorageClass requested in the PVC. A PV of the same class will be bound to this PVC.

- spec.accessModes: [ReadWriteOnce]: The access modes for the PVC. ReadWriteOnce means the volume can be mounted as read-write by a single node. The bound PV must also support this mode.

- spec.resources.requests.storage: 5Gi: The storage size being requested in the PVC. The bound PV must have at least this much storage available.

When these two manifests are applied, Kubernetes will bind my-pvc to my-pv as they have compatible accessModes and storageClassName, and the PV has enough storage for the PVC's request. The bound volume can then be used by a Pod by referencing my-pvc in its spec.
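Referencing the claim from a Pod could look like the sketch below. The Pod name, container name, image, and mount path are assumptions made for illustration; only the claim name my-pvc comes from the text above.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: my-app                     # hypothetical name
spec:
  containers:
    - name: app
      image: nginx:1.27            # illustrative image
      volumeMounts:
        - name: data
          mountPath: /usr/share/nginx/html   # assumed path the application reads from
  volumes:
    - name: data
      persistentVolumeClaim:
        claimName: my-pvc          # the PVC described above
```

The Pod refers only to the claim and never names the PV directly, so the backing storage can change without touching the Pod spec.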

About 8grams

We are a small DevOps Consulting Firm that has a mission to empower businesses with modern DevOps practices and technologies, enabling them to achieve digital transformation, improve efficiency, and drive growth.
