Kubernetes 101: Service

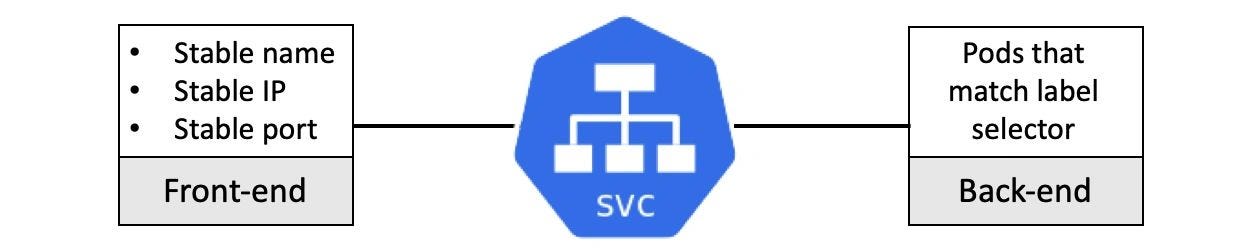


Introduction

In Kubernetes, a Service is an abstraction that defines a logical set of Pods and a policy for accessing them. Unlike Pods, which can come and go, a Service has a stable IP address and DNS name. Even when the underlying Pods are replaced because of failures or upgrades, the Service keeps these properties intact, so other components don't lose connectivity.

Services are integral in Kubernetes because they bring predictability in an otherwise dynamic environment. They provide a unified means of accessing the diverse functionalities provided by individual Pods, irrespective of their life cycle. As Pods disappear and reappear, Services ensure a reliable way to reach the active Pods, creating a seamless user experience.

The Role of Services on the Kubernetes Cluster

Services in a Kubernetes cluster play an instrumental role. Their crucial responsibilities are:

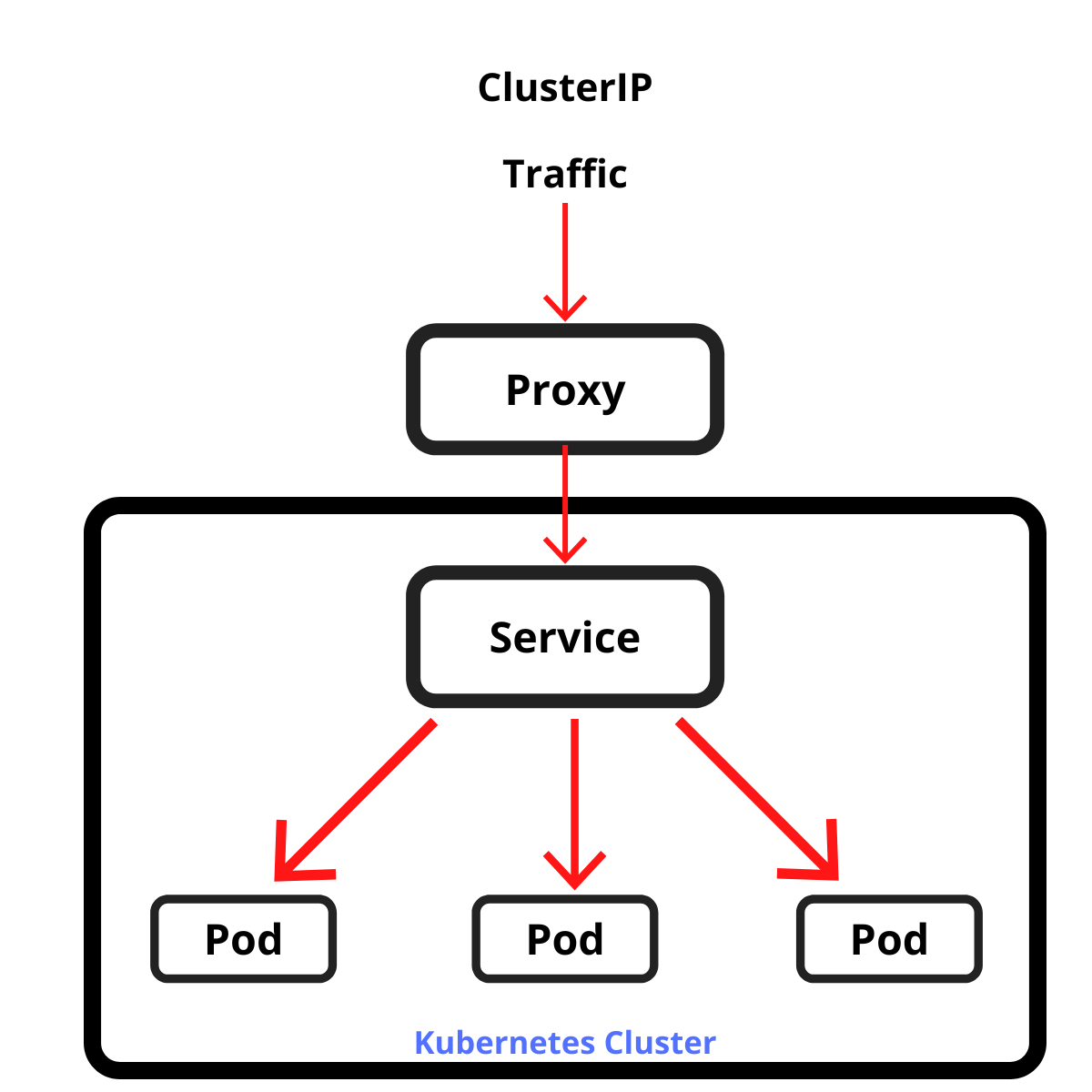

- Load balancing: Services distribute network traffic across multiple Pods to ensure no single Pod becomes a bottleneck. This load balancing aids in achieving high availability and fault tolerance in applications.

- Stable network configuration: Kubernetes Services provide stable networking by assigning a consistent IP address, decoupling the ephemeral lifecycle of Pods from the network. This means that even when Pods die and are replaced, the Service's IP address remains unchanged, reducing the need for configuration updates.

- Intercommunication among pods: Services facilitate seamless communication among different Pods. They allow Pods, which could be part of various deployments but belong to the same application, to interact and function in a synchronized manner.

Service Discovery in Kubernetes

Service discovery is a crucial feature in distributed systems like Kubernetes. It allows applications and microservices running on a cluster to find and communicate with each other automatically. Kubernetes accomplishes service discovery primarily in two ways:

- Environment Variables: When a Pod starts, the kubelet injects a set of environment variables for each active Service, such as MY_SERVICE_SERVICE_HOST and MY_SERVICE_SERVICE_PORT for a Service named my-service. These variables let the Pod reach the Service without any lookup. However, using environment variables for service discovery can become complicated in larger systems due to naming collisions, and a Pod only sees the Services that existed when it started, so Pods must be restarted to recognize new Services.

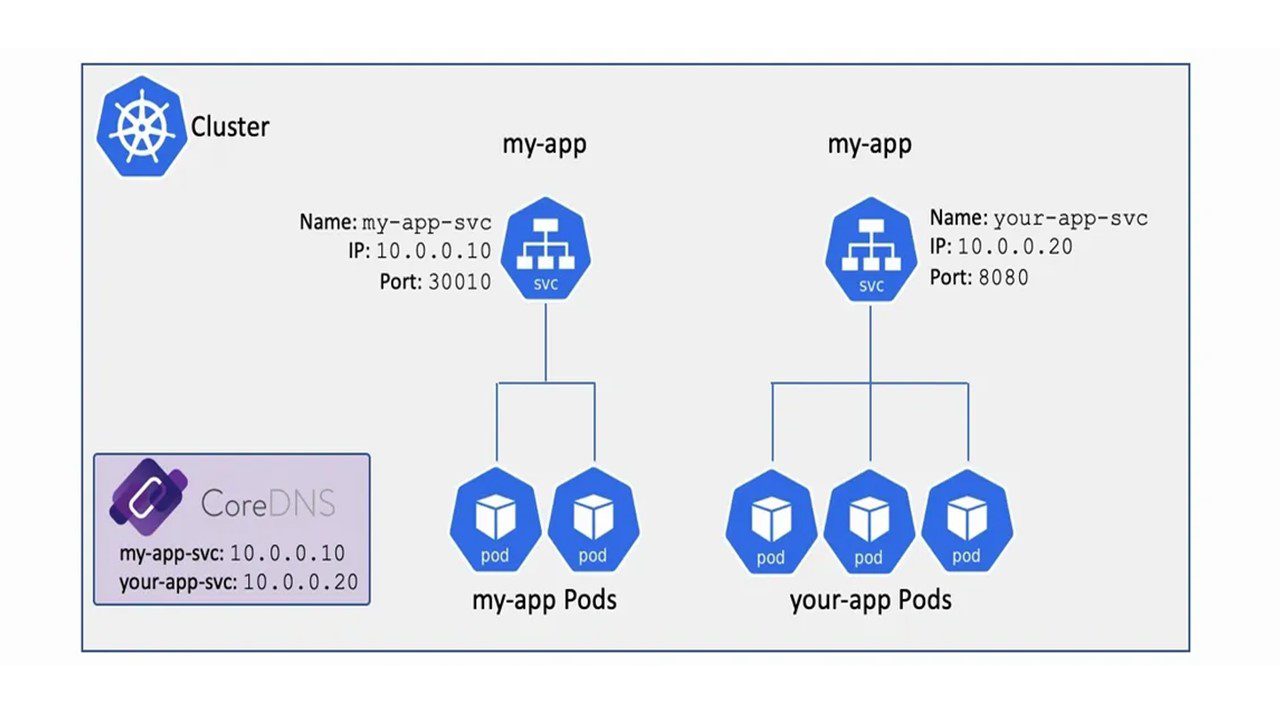

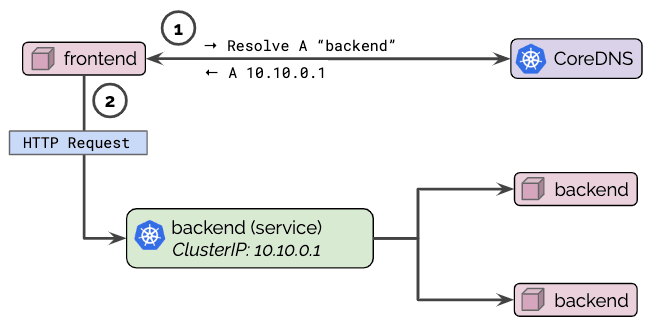

- DNS: Kubernetes offers a more elegant approach to service discovery using a cluster DNS server, typically CoreDNS or kube-dns. When a Service is created, it is assigned a DNS name. Any Pod in the cluster can reach the Service using this name, which simplifies inter-service communication significantly.
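The environment-variable approach above can be sketched with a small Pod manifest. This is an illustration only: the Pod name and image are assumptions, and it presumes a Service named my-service already exists in the same namespace before the Pod starts.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: env-discovery-demo        # hypothetical Pod name
spec:
  restartPolicy: Never
  containers:
    - name: client
      image: busybox:1.28         # assumed image; any image with a shell would do
      command:
        - sh
        - "-c"
        # The shell reads these variables from the container environment,
        # where the kubelet placed them when the Pod started.
        - 'echo "my-service is at $MY_SERVICE_SERVICE_HOST:$MY_SERVICE_SERVICE_PORT"'
```

If the Service is created after the Pod, the variables are empty, which is the restart limitation described in the first bullet.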

Kubernetes DNS

Kubernetes Domain Name System (DNS) is a built-in service launched at cluster startup, designed to provide name resolution for services within a Kubernetes cluster. Its purpose is to enable pods and services within the cluster to communicate with each other efficiently using easy-to-remember domain names, instead of cumbersome IP addresses.

The way Kubernetes DNS works is simple yet effective. When you create a Service, it is assigned a unique DNS name in the format service-name.namespace-name.svc.cluster.local, where:

- service-name is the name of your service.
- namespace-name is the name of the namespace in which your service resides.
- svc is a static value that denotes this is a service.
- cluster.local is the default domain for the cluster (this can be changed if needed).

For example, if you have a Service named my-service in a namespace named my-namespace, the fully qualified domain name (FQDN) would be my-service.my-namespace.svc.cluster.local.

Pods within the same namespace can reach the Service by its short name (for example, my-service). Pods in a different namespace must qualify the name with the namespace, either as my-service.my-namespace or as the fully qualified domain name. For a regular Service, the DNS server responds with the Service's ClusterIP, which the requesting Pod then uses to reach the Service.
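As a rough sketch of a cross-namespace lookup, the Pod below resolves the example Service by its FQDN. The Pod name, the namespace other-namespace, and the image are assumptions made for illustration; my-service and my-namespace come from the example above.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: dns-lookup-demo           # hypothetical Pod name
  namespace: other-namespace      # hypothetical namespace, different from my-namespace
spec:
  restartPolicy: Never
  containers:
    - name: lookup
      image: busybox:1.28         # assumed image that ships nslookup
      # Resolve the Service from another namespace using its full name.
      command: ["nslookup", "my-service.my-namespace.svc.cluster.local"]
```

A Pod running inside my-namespace could pass just my-service to the same command.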

One of the significant benefits of Kubernetes DNS is that it allows applications to self-discover services, meaning that applications do not need to hardcode the IP addresses of their dependencies. This functionality is crucial in a dynamic environment like Kubernetes, where Pods (and consequently IP addresses) can frequently come and go.

By abstracting away the network details and enabling services to be located by their names, Kubernetes DNS enhances the loose coupling of microservices, making the system more robust, scalable, and easier to manage.

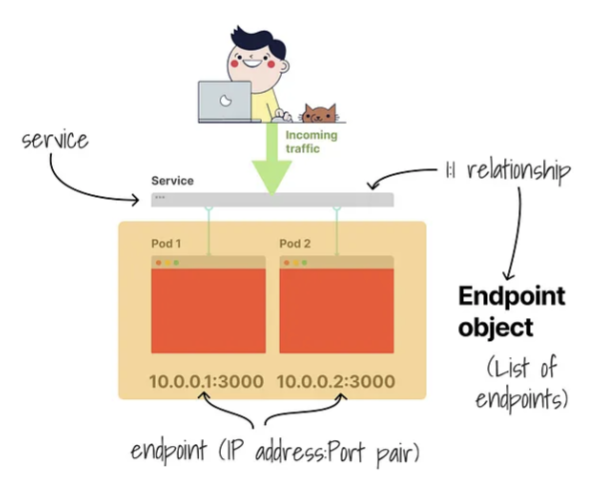

Understanding Endpoint Objects in Kubernetes

In Kubernetes, an Endpoints object is a collection of IP addresses and ports that back a Service. When a Service defines a selector, an associated Endpoints object is created automatically; it stores the IP addresses and ports of the Pods that the Service forwards traffic to.

Endpoints objects play a crucial role in maintaining the communication between Services and Pods. If a Pod dies and a new one replaces it, the old Pod's IP is removed from the Endpoints object and the new Pod's IP is added, ensuring continuous service availability.

An Endpoints object has the same name as its associated Service and tracks the IP addresses and ports that receive incoming connections. For example, consider a Service named my-service that selects all Pods with the label app=MyApp. If these Pods have the IPs 192.0.2.1 and 192.0.2.2, the Endpoints object my-service would include these IP addresses.
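For the example above, the resulting object might look roughly like the sketch below. Kubernetes maintains this object itself for Services with a selector, so it is shown for reading rather than for applying; the port 9376 is an assumption, since the text only names the IP addresses.

```yaml
apiVersion: v1
kind: Endpoints
metadata:
  name: my-service        # same name as the Service it belongs to
subsets:
  - addresses:
      - ip: 192.0.2.1     # first Pod matching app=MyApp
      - ip: 192.0.2.2     # second Pod matching app=MyApp
    ports:
      - port: 9376        # assumed Pod port, not stated in the text
        protocol: TCP
```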

Service and Deployment on Kubernetes

Pods represent the smallest deployable units in a Kubernetes cluster and can be created and destroyed rapidly during the application's lifecycle. However, managing Pods individually in a large system can be cumbersome. This is where Deployments come in.

A Deployment in Kubernetes manages the desired state for Pods and ReplicaSets. It ensures that the specified number of Pods are running at any given time by creating or destroying Pods as necessary.

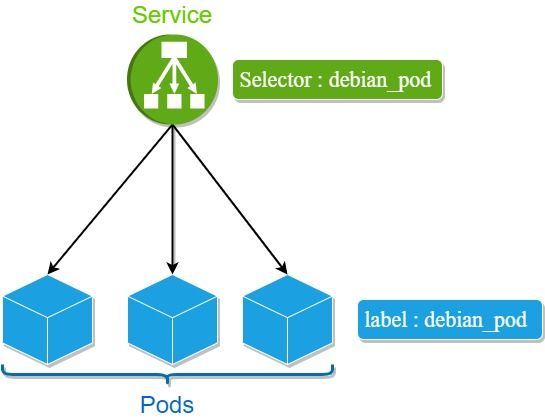

Services, Deployments, and Pods interact closely within a Kubernetes system. While a Deployment maintains the desired state of Pods, a Service provides a unified network interface to those Pods. The Service uses label selectors to select the Pods managed by the Deployment. This relationship exposes Pods (which otherwise have unpredictable IPs due to their dynamic lifecycle) through a Service, providing network stability.

For instance, consider a Deployment managing a set of Pods. If these Pods are configured to serve HTTP requests, a Service can be set up using a selector that matches the labels of these Pods. The Service can then distribute incoming requests to these Pods, ensuring efficient load balancing.
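A minimal sketch of that pairing is shown below. The names, the app: web label, the replica count, and the nginx image are all assumptions chosen for illustration; the point is only that the Service selector matches the labels in the Deployment's Pod template.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web                 # hypothetical Deployment name
spec:
  replicas: 3               # assumed replica count
  selector:
    matchLabels:
      app: web
  template:
    metadata:
      labels:
        app: web            # label that the Service selects on
    spec:
      containers:
        - name: web
          image: nginx:1.25 # assumed HTTP server image
          ports:
            - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: web                 # hypothetical Service name
spec:
  selector:
    app: web                # matches the Pod template labels above
  ports:
    - protocol: TCP
      port: 80
      targetPort: 80
```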

How Pod Label Selectors Work

In Kubernetes, labels are key-value pairs attached to objects, such as Pods, that are intended to convey meaningful and relevant information. They provide identifying metadata and serve as a means to categorize and organize resources based on shared characteristics. A label, for example, can indicate that certain Pods belong to a specific application layer, like frontend or backend.

Label selectors are the mechanism that Services (and other resources, like Deployments or ReplicaSets) use to determine the set of Pods to which an operation applies. The 'selector' field of the Service definition provides the conditions that must be met. Pods that satisfy these conditions are selected and become part of the Service.

Here's an example of a Service definition with a selector:
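A minimal sketch of such a definition follows. The name my-service and the app: MyApp selector come from the description below; the port numbers are assumptions added for illustration.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: my-service
spec:
  selector:
    app: MyApp          # selects Pods labeled app=MyApp
  ports:
    - protocol: TCP
      port: 80          # assumed Service port
      targetPort: 9376  # assumed Pod port
```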

In this example, the Service my-service targets any Pod with the label app=MyApp. The selector app: MyApp corresponds to the key-value pair of the label attached to the Pods.

When you apply this Service, the kube-proxy component on each node in your Kubernetes cluster sets up routing rules. These rules direct traffic destined for the Service's IP and port to one of the Pods that the Service's label selector has chosen.

It's important to note that when the set of Pods selected by a Service changes (e.g., when Pods are created, destroyed, or their labels are updated), the Service's endpoints are updated automatically, and traffic is routed to the new set of Pods.

Thus, label selectors enable Kubernetes Services to dynamically and flexibly associate with Pods, providing a reliable interface to groups of Pods and decoupling the ephemeral lifecycle of Pods from other parts of the system. This mechanism is at the heart of how Services enable network stability in the face of Pod churn, and allows Kubernetes to provide robust, distributed service networking.

Different Types of Services in Kubernetes

Kubernetes offers several types of Services to suit varying use cases:

- ClusterIP: The default type of Service in Kubernetes. It provides a Service inside the cluster that other apps can access. This Service gets a unique IP address that lasts the duration of the Service and can be reached by any other component within the cluster.

- NodePort: A NodePort Service is accessible from outside the cluster. It works by opening the same static port on every Node in the cluster (by default from the 30000-32767 range) and forwarding traffic from that port to the Service. While it provides external connectivity, it lacks flexibility because all traffic must go through that static port.

- LoadBalancer: A LoadBalancer Service provisions a load balancer from the underlying infrastructure and routes external traffic to the Service. It's an extension of the NodePort Service and offers more flexibility and control for incoming traffic.

- ExternalName: An ExternalName Service maps a Service to a DNS name. It's a way to create a Service for an existing service that resides outside the cluster. It doesn't have any selectors or define any Endpoints. Instead, the cluster DNS returns a CNAME record pointing to the external name, so no proxying or forwarding takes place.

| Service Type | Definition | Exposed To | Use Case |
|---|---|---|---|
| ClusterIP | Default Service type that provides a Service inside the cluster that other apps can access. | Within the Cluster | When you want to expose your service within the Kubernetes cluster only. |
| NodePort | Exposes the Service on the same port of each selected Node in the Kubernetes cluster using NAT. | Outside the Cluster | When you need to allow external connections to your service and are okay with the constraint of a single port across all nodes. |
| LoadBalancer | Exposes the Service externally using the Load Balancer of a cloud provider. | Outside the Cluster | When you are running your cluster in a cloud environment and want to leverage the cloud provider's native load balancing. |
| ExternalName | Maps a Service to a specified DNS name. | Anywhere | When you need to access external systems directly, without going through a proxy or load balancer. |
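To make the differences concrete, here is a rough sketch of a NodePort and an ExternalName Service. The Service names, the node port 30080, and the hostname db.example.com are hypothetical values chosen for illustration.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: my-nodeport-service   # hypothetical name
spec:
  type: NodePort
  selector:
    app: MyApp
  ports:
    - protocol: TCP
      port: 80
      targetPort: 9376
      nodePort: 30080         # assumed; must fall in the node port range (30000-32767 by default)
---
apiVersion: v1
kind: Service
metadata:
  name: external-db           # hypothetical name
spec:
  type: ExternalName
  externalName: db.example.com  # hypothetical external hostname; note there is no selector
```

Omitting the type field, or setting it to ClusterIP, gives the default in-cluster behavior.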

Example of Service Manifest
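The manifest below is a sketch assembled from the field values named in the breakdown that follows (my-app-service, app: MyApp, TCP port 80 to targetPort 9376, type LoadBalancer); treat the exact layout as an assumption.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: my-app-service
spec:
  selector:
    app: MyApp            # Pods labeled app=MyApp receive the traffic
  ports:
    - protocol: TCP
      port: 80            # port the Service listens on
      targetPort: 9376    # port on the Pods that traffic is forwarded to
  type: LoadBalancer      # expose externally through the cloud provider's load balancer
```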

Now, let's break down each section:

- apiVersion: v1: This defines the version of the Kubernetes API you're using to create this object. For a Service, the API version is v1.
- kind: Service: The kind field defines the type of the Kubernetes object you want to create. In this case, it's a Service.
- metadata: This section includes data that helps uniquely identify the object. For this object, it only includes:
  - name: my-app-service: The name of the Service. This name must be unique within its namespace.
- spec: This section describes the desired state of the object. Here, the spec field includes:
  - selector: This field defines how the Service identifies the Pods to which it will direct traffic. In this example, the Service selects Pods with the label app=MyApp.
  - ports: This is a list of ports the Service exposes. In this case, there is only one port.
    - protocol: TCP: The network protocol this port uses. Other options could be UDP or SCTP.
    - port: 80: The port number the Service listens on. Traffic sent to this port will be forwarded to the Pods.
    - targetPort: 9376: The port number on the Pod to which the Service will forward the traffic. The targetPort can be the same as the port, or it can be different.
  - type: LoadBalancer: This field indicates the type of Service. LoadBalancer means the Service will be exposed externally using the cloud provider's load balancer.

This example Service named my-app-service would listen to TCP traffic on port 80 and forward it to TCP port 9376 on any Pod with the label app=MyApp. If the cluster is being run in a cloud provider that supports it, the Service will be exposed externally via a cloud load balancer.

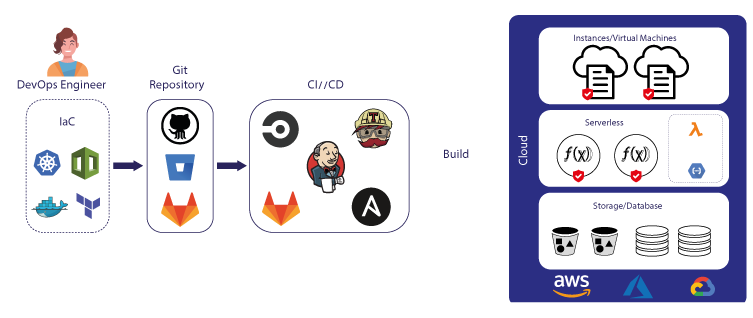

About 8grams

We are a small DevOps Consulting Firm that has a mission to empower businesses with modern DevOps practices and technologies, enabling them to achieve digital transformation, improve efficiency, and drive growth.
