Kubernetes 101: Introduction

Kubernetes (often abbreviated to K8s) is a powerful, open-source system for managing containerized applications across multiple hosts.

Introduction

In software development, containerization and cloud-native applications have become central to how systems are built and run. Understanding these technologies and how they are orchestrated, especially with Kubernetes, is now an essential skill for developers and organizations. This article walks through containers, containerized and cloud-native applications, container orchestration, and Kubernetes itself, and shows how they relate to one another.

Understanding Containers

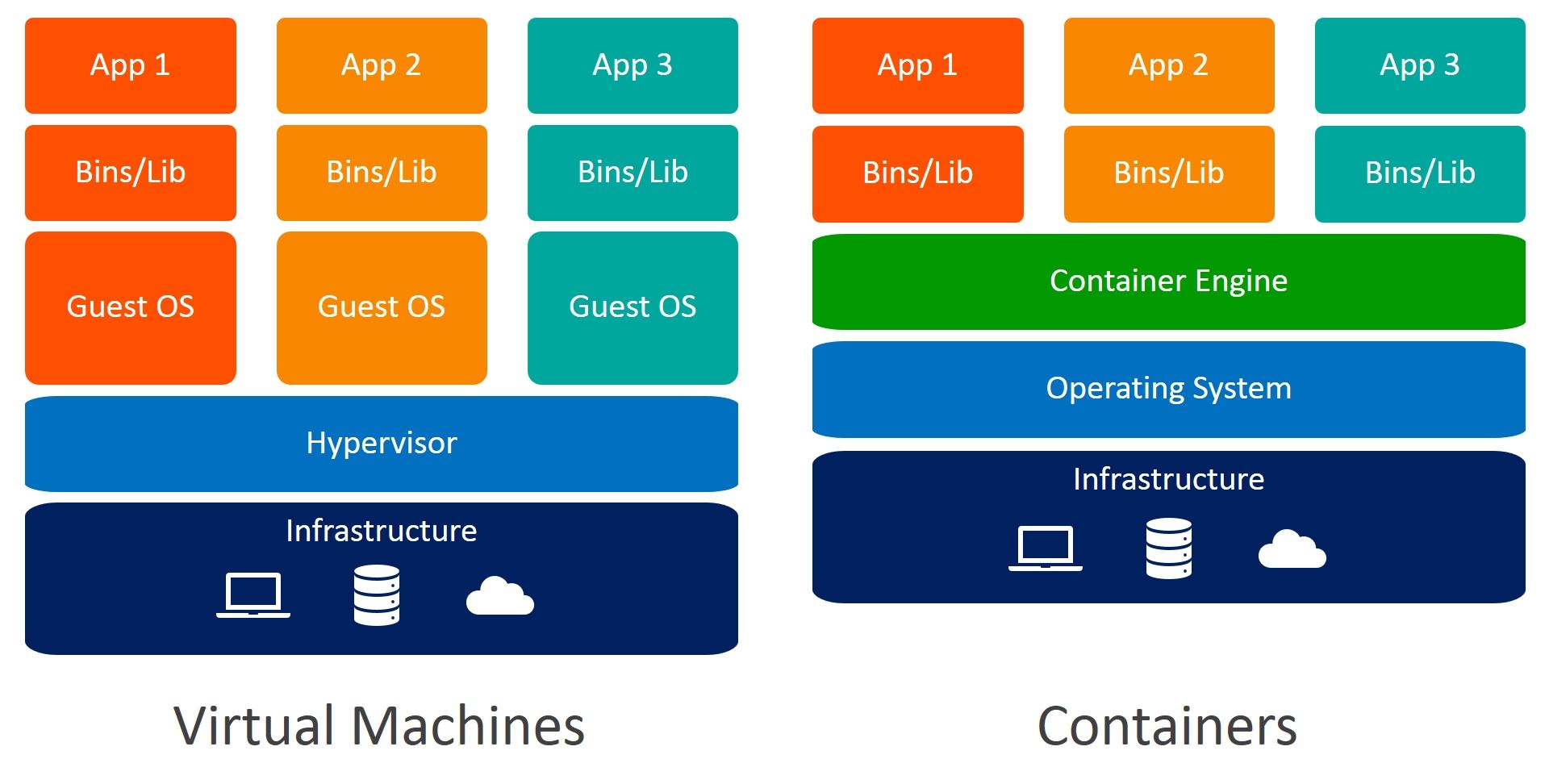

In software terms, a container is a lightweight, standalone, executable package that includes everything needed to run a piece of software: the code, a runtime, libraries, environment variables, and configuration files. It is an abstraction at the application layer that bundles an application's code, configuration, and dependencies into a single unit. Containers rely on operating-system-level virtualization: instead of emulating hardware and booting a full guest operating system, as a virtual machine does, the host kernel runs multiple isolated user-space instances (on Linux, using kernel features such as namespaces for isolation and cgroups for resource limits). Because containers share the host kernel, they start faster and use less memory and disk than virtual machines, and because they carry their dependencies with them, they are highly portable between hosts.

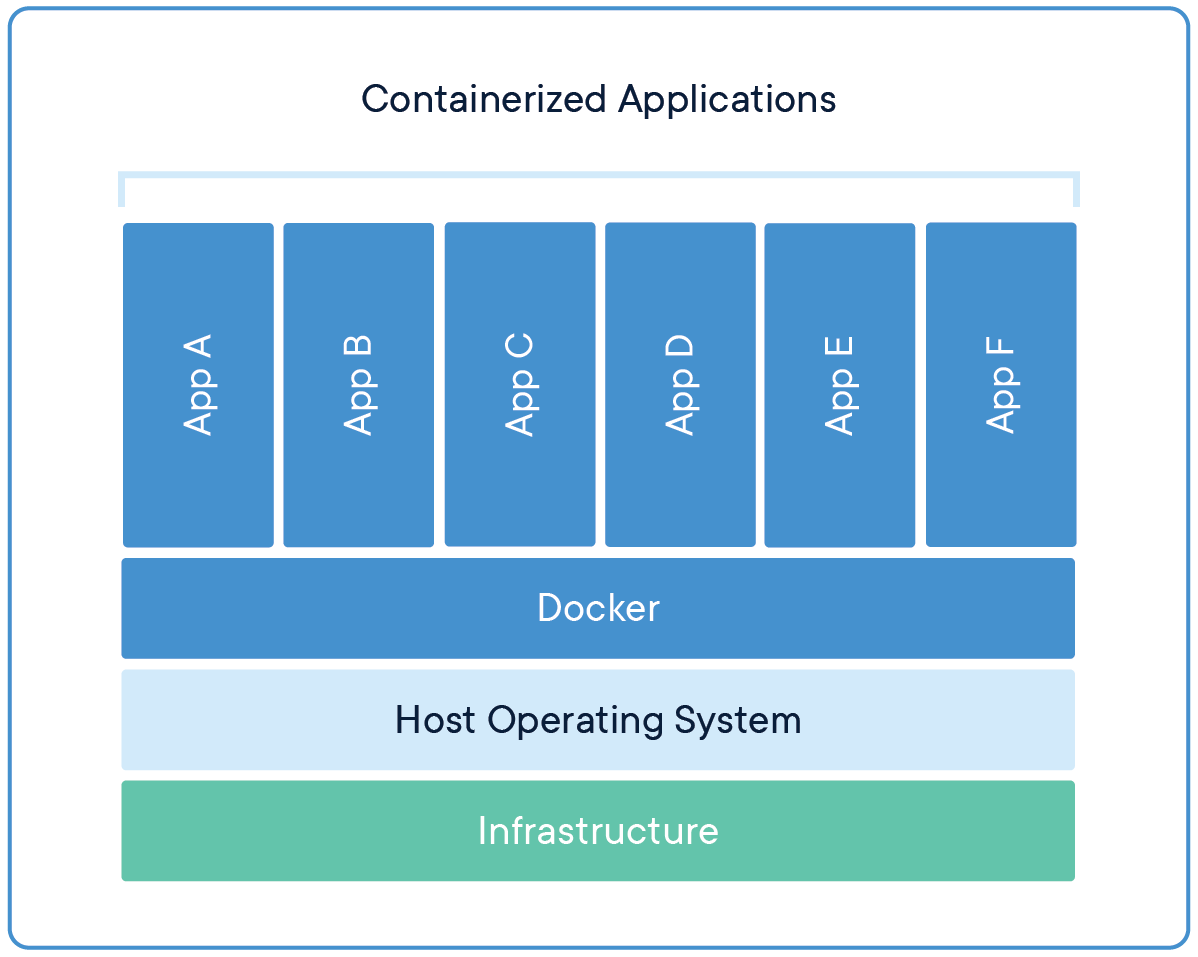

Containerized Applications

Containerized applications have changed how software is developed, packaged, and deployed. A containerized application packages the application code and all its dependencies into a standardized unit. This approach suits the microservices architectural style, in which each function of an application is packaged as an independent, scalable service that runs in its own container. Services communicate through well-defined APIs and can be updated independently, which makes the overall system more resilient and easier to change.

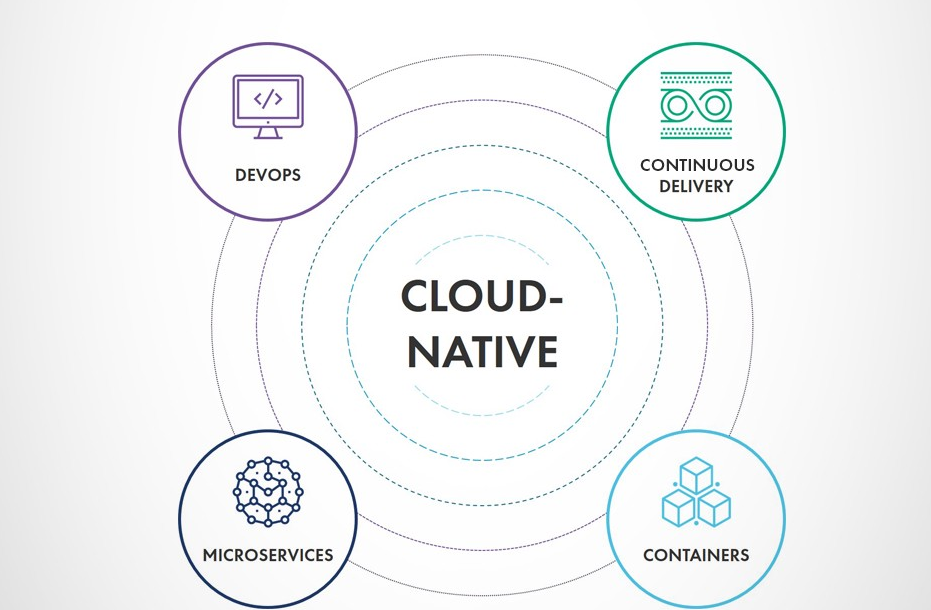

Cloud-Native Applications

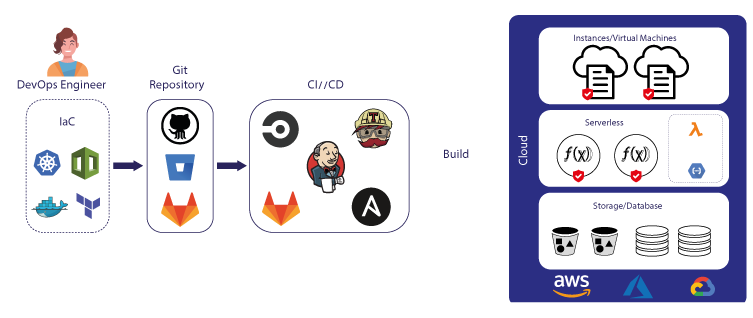

Cloud-native applications are designed and deployed specifically for cloud computing environments. They take full advantage of the cloud delivery model, in which computing resources are provisioned on demand over the internet. Following principles such as those of the twelve-factor app methodology, cloud-native applications are often organized around microservices, deployed in containers, and managed through agile DevOps processes. They are built to scale out (horizontal scaling), using the elasticity of the cloud to match resources to demand.
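One twelve-factor principle with a direct effect on containers is "store config in the environment": the same container image runs unchanged in development, staging, and production, and only the environment variables differ. The Python sketch below illustrates the idea; the variable names `APP_PORT` and `DATABASE_URL` and the `load_config` helper are hypothetical, chosen only for the example.

```python
import os
from dataclasses import dataclass
from typing import Mapping


@dataclass(frozen=True)
class AppConfig:
    port: int
    database_url: str


def load_config(env: Mapping[str, str] = os.environ) -> AppConfig:
    """Read settings from environment variables instead of hard-coding them.

    APP_PORT and DATABASE_URL are hypothetical names used for illustration.
    """
    port = int(env.get("APP_PORT", "8080"))  # optional setting with a default
    database_url = env.get("DATABASE_URL")
    if database_url is None:
        # Fail fast at startup when a required setting is missing.
        raise RuntimeError("DATABASE_URL must be set")
    return AppConfig(port=port, database_url=database_url)


if __name__ == "__main__":
    # Pass an explicit mapping so the demo does not depend on the real environment.
    print(load_config({"APP_PORT": "9000", "DATABASE_URL": "postgres://db:5432/app"}))
```

In Kubernetes, such variables are typically set through a container's `env` field or populated from a ConfigMap or Secret, so changing configuration does not require rebuilding the image.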

Container Orchestration

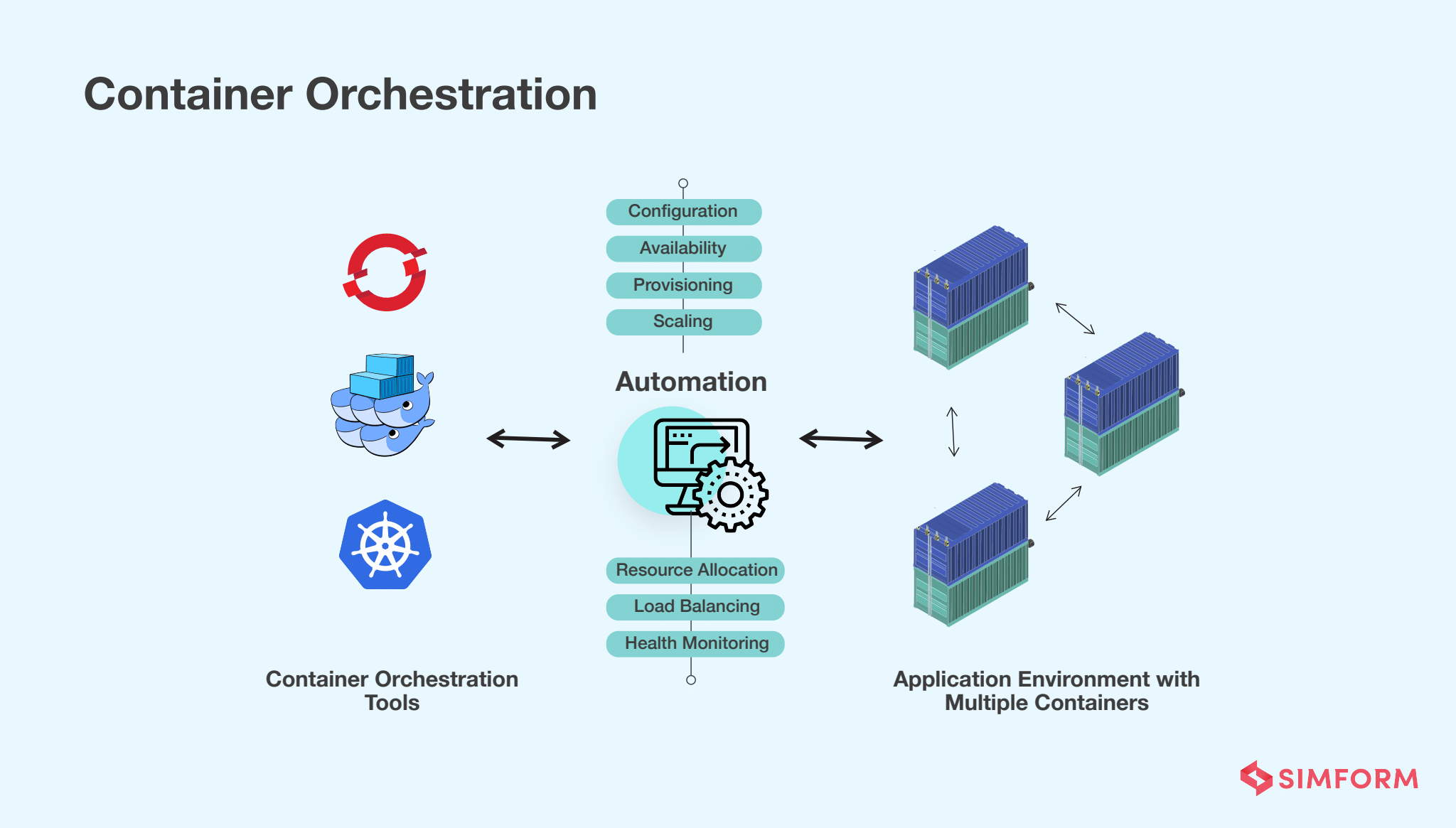

As containerized applications and microservices grow in number, managing and deploying them becomes much more complex. Container orchestration addresses this challenge by automating the container lifecycle. Orchestration tools handle scheduling, service discovery, load balancing, network routing, secrets management, scaling, updates, and fault tolerance across a fleet of containers, which greatly simplifies the operation of complex, distributed systems.

In short, container orchestration is the automated management of container lifecycles, especially in large, dynamic environments. It matters to businesses because it takes over tasks such as deploying, scaling, networking, and keeping containers available, work that would otherwise be done by hand.
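At the heart of automated lifecycle management is a control loop: the orchestrator repeatedly compares the desired state (for example, "run three replicas of this service") with the observed state and acts to close the gap. Kubernetes controllers follow this reconcile pattern. The Python sketch below is a simplified, in-memory illustration of the pattern, not Kubernetes code; the `Cluster` class, the `reconcile` function, and the container names are hypothetical.

```python
from collections import defaultdict
from itertools import count


class Cluster:
    """Toy model of observed state: running container names grouped by app."""

    def __init__(self) -> None:
        self.running = defaultdict(list)
        self._ids = count(1)

    def start(self, app: str) -> str:
        name = f"{app}-{next(self._ids)}"
        self.running[app].append(name)
        return name

    def stop(self, app: str, name: str) -> None:
        self.running[app].remove(name)


def reconcile(cluster: Cluster, app: str, desired: int) -> list:
    """Compare desired and observed replica counts and act to close the gap."""
    actions = []
    observed = list(cluster.running[app])
    # Too few replicas: start the missing containers.
    for _ in range(desired - len(observed)):
        actions.append(("start", cluster.start(app)))
    # Too many replicas: stop the surplus ones.
    for name in observed[desired:]:
        cluster.stop(app, name)
        actions.append(("stop", name))
    return actions


if __name__ == "__main__":
    cluster = Cluster()
    print(reconcile(cluster, "web", 3))  # starts web-1, web-2, web-3
    cluster.stop("web", "web-2")         # simulate a crashed container
    print(reconcile(cluster, "web", 3))  # replaces it with web-4
    print(reconcile(cluster, "web", 3))  # already converged: no actions
```

Because the loop only ever looks at the difference between desired and observed state, the same code handles first deployment, crash recovery, and scaling down.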

Container orchestration can be used in any environment where you use containers, and is particularly necessary in a multi-container environment that requires coordination and automation of many operational tasks including:

- Provisioning and Deployment: Orchestration tools can automatically manage the allocation of resources and scheduling of containers to specific hosts based on predefined policies. They can also handle the deployment process, including pulling images from a registry, deploying them as containers on hosts, and then managing their lifecycle.

- Health Monitoring: One of the key features of container orchestration is its ability to continuously monitor the health of containers and the applications running inside them. Orchestration tools can automatically replace or restart containers that fail, kill containers that don’t respond to user-defined health checks, and provide notifications regarding any changes in the overall container state.

- Service Discovery and Load Balancing: With potentially hundreds of containers starting, stopping, and moving between hosts, finding the containers that provide a specific service becomes challenging. Container orchestration platforms often include service discovery and load balancing capabilities. They can route traffic to containerized services automatically, give each workload its own IP address (in Kubernetes, every Pod gets one), and distribute network traffic to balance load across a pool of instances of an application.

- Scaling: As application demand changes, you need to scale the number of containers up or down. Container orchestration supports automated horizontal scaling (increasing or decreasing the number of container instances) based on CPU usage or other metrics, including application-provided ones.

- Configuration and Secret Management: Applications need configuration data as well as sensitive information such as passwords, OAuth tokens, and SSH keys. Container orchestration solutions can store both kinds of data, provide controlled access to them, and inject them into containers without rebuilding the container images.

- Networking: Containers need to communicate with each other and with external applications. Orchestration tools provide networking capabilities that allow communication between different services in the same cluster and expose services to the internet.

- Storage Management: Persistent storage is crucial for stateful applications. Container orchestration platforms can automatically mount storage systems of your choice, whether local storage, a public cloud provider like GCP or AWS, or a network storage system like NFS, Ceph, GlusterFS, or others.
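For the scaling item above, Kubernetes' Horizontal Pod Autoscaler documents a simple core rule: desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). The Python function below is a simplified sketch of that calculation. It adds the minimum and maximum replica bounds an autoscaler is configured with and a tolerance band (0.1 is the Kubernetes default), but ignores details such as Pod readiness and scale-down stabilization windows; the function and parameter names are illustrative.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
    tolerance: float = 0.1,
) -> int:
    """Simplified HPA-style calculation of the replica count to scale to."""
    ratio = current_metric / target_metric
    # Within the tolerance band, keep the current size to avoid flapping.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    proposed = math.ceil(current_replicas * ratio)
    # Clamp the result to the configured bounds.
    return max(min_replicas, min(max_replicas, proposed))


if __name__ == "__main__":
    # 4 replicas averaging 90% CPU against a 60% target -> ceil(4 * 1.5) = 6
    print(desired_replicas(4, current_metric=90, target_metric=60))
```

Scaling proportionally to the metric ratio lets the autoscaler jump straight to a reasonable size instead of adding one container at a time.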

Introduction to Kubernetes

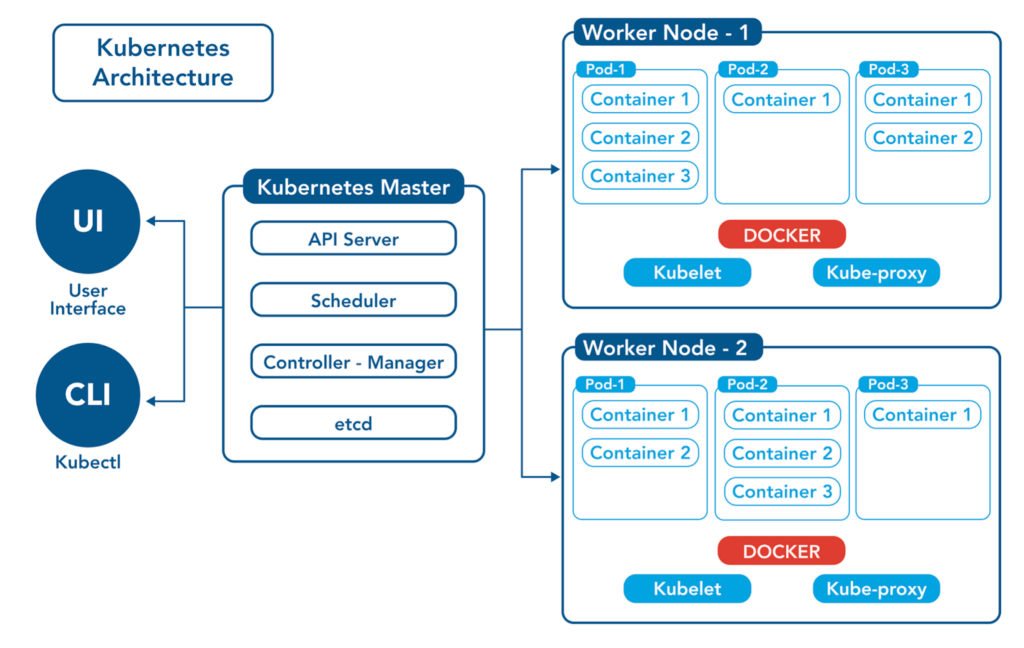

Kubernetes (often abbreviated to K8s) is an open-source system for managing containerized applications across multiple hosts. It manages the full lifecycle of containerized applications and services with the goals of predictability, scalability, and high availability. Kubernetes is built around a set of core abstractions, including Pods (the smallest deployable unit: one or more containers that share network and storage), Services (which define a logical set of Pods and a policy by which to access them), Volumes (storage that the containers in a Pod can mount), and Namespaces (multiple virtual clusters backed by the same physical cluster).
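To make these abstractions concrete, the Python sketch below builds a Pod (with a Volume and a liveness probe for health monitoring) and a Service that selects it by label, then prints them as JSON, a format the Kubernetes API and `kubectl apply -f` accept alongside YAML. The names `web` and `demo`, the `nginx:1.25` image, and the helper functions are illustrative assumptions; applying the output would also require the `demo` namespace to exist.

```python
import json


def pod_manifest(name: str, namespace: str, image: str, port: int) -> dict:
    """A single-container Pod with a scratch volume and a liveness probe."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name, "namespace": namespace, "labels": {"app": name}},
        "spec": {
            "containers": [
                {
                    "name": name,
                    "image": image,
                    "ports": [{"containerPort": port}],
                    # Mount the Pod-level volume declared below.
                    "volumeMounts": [{"name": "cache", "mountPath": "/cache"}],
                    # The kubelet restarts the container if this check keeps failing.
                    "livenessProbe": {"httpGet": {"path": "/", "port": port}},
                }
            ],
            # emptyDir: scratch storage that lives as long as the Pod does.
            "volumes": [{"name": "cache", "emptyDir": {}}],
        },
    }


def service_manifest(name: str, namespace: str, port: int, target_port: int) -> dict:
    """A Service that selects Pods by label and gives them one stable address."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "selector": {"app": name},  # must match the Pod's labels
            "ports": [{"port": port, "targetPort": target_port}],
        },
    }


if __name__ == "__main__":
    items = [
        pod_manifest("web", "demo", "nginx:1.25", 80),
        service_manifest("web", "demo", 80, 80),
    ]
    # Wrap both objects in a v1 List so one file can be applied at once.
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": items}, indent=2))
```

The Service never names the Pod directly; it finds it through the `app: web` label, which is what lets Pods be replaced without clients noticing.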

History and Evolution of Kubernetes

Kubernetes was originally developed by Google, drawing from a decade of experience running production workloads at scale using a system called Borg. Google open-sourced the Kubernetes project in 2014. Recognizing the value of this project to the broader IT community, Google donated Kubernetes to the Cloud Native Computing Foundation (CNCF) in 2015. Over the years, Kubernetes has become the industry standard for container orchestration due to its comprehensive feature set, backed by a vibrant community of contributors and an extensive ecosystem of complementary tools.

Kubernetes, Containers, and Docker: How They Relate

Kubernetes and Docker are often mentioned in tandem, but they serve distinct roles in the world of containerized applications. Docker is a platform that allows developers to package applications and their dependencies into a standardized unit for software development, known as a container. Docker simplifies the process of managing dependencies in an isolated environment and thus reduces the "it works on my machine" problem.

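Kubernetes, on the other hand, is an orchestration platform that manages containers: it automates the deployment, scaling, and management of containerized applications. Kubernetes does not run containers itself; it delegates that work to a container runtime through the Container Runtime Interface (CRI). Common runtimes today are containerd and CRI-O. Kubernetes could use Docker Engine directly through a component called dockershim until version 1.24 removed it, but images built with Docker still run unchanged because they follow the standard OCI image format. A Kubernetes Pod, the smallest and simplest unit in the Kubernetes object model, can run one or more containers. In this architecture, the container runtime runs the actual containers, while Kubernetes manages the Pods and ensures everything is running as expected.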
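A Pod with more than one container is often used for the "sidecar" pattern, where a helper container supports the main one. The Python sketch below builds such a Pod as a dictionary and prints it as JSON: a writer container appends timestamps to a file, and a reader container follows the same file through a shared `emptyDir` volume. The Pod name, container names, `busybox:1.36` image, and shell commands are illustrative assumptions. Once applied, the node's container runtime would start both containers, while Kubernetes treats them as one schedulable Pod.

```python
import json

# Both containers mount the same volume at the same path.
SHARED = {"name": "shared", "mountPath": "/shared"}

pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "writer-with-sidecar"},
    "spec": {
        "containers": [
            {   # Main container: appends a timestamp to a shared file every 5 s.
                "name": "writer",
                "image": "busybox:1.36",
                "command": ["sh", "-c", "while true; do date >> /shared/out.log; sleep 5; done"],
                "volumeMounts": [SHARED],
            },
            {   # Sidecar: follows the same file through the shared volume.
                "name": "reader",
                "image": "busybox:1.36",
                "command": ["sh", "-c", "touch /shared/out.log; tail -f /shared/out.log"],
                "volumeMounts": [SHARED],
            },
        ],
        # Pod-level volume; containers in a Pod also share its network namespace.
        "volumes": [{"name": "shared", "emptyDir": {}}],
    },
}

if __name__ == "__main__":
    print(json.dumps(pod, indent=2))
```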

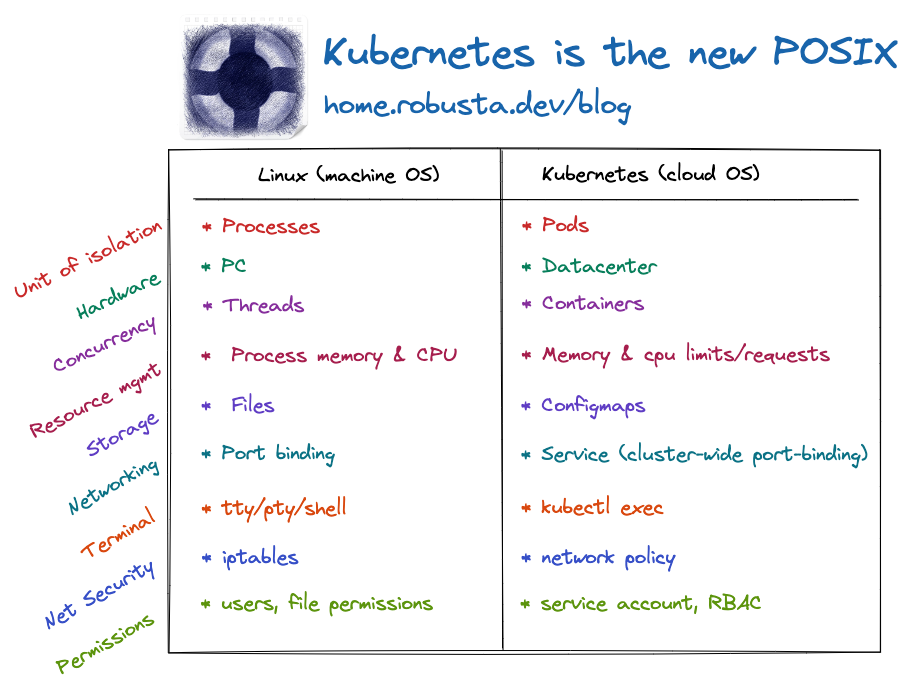

Kubernetes as the Operating System of the Cloud

Given the central role Kubernetes plays in managing cloud workloads, it is often called the 'operating system of the cloud'. Just as an operating system manages resources, processes, and inter-process communication on a single machine, Kubernetes manages these aspects across a cluster of machines. It schedules applications onto machines (akin to process scheduling), allocates resources (as a kernel does), and handles communication between applications. This lets users manage services as if they were running on a single system, even though they actually run on a cluster of machines in a distributed environment.
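The scheduling analogy can be made concrete. The Kubernetes scheduler places a Pod in two broad phases: it filters out nodes that cannot run the Pod, then scores the remaining nodes and picks the best one. The Python sketch below is a heavily simplified, hypothetical version of that idea: it considers only CPU and memory requests and uses a "most free capacity left" score loosely modeled on a least-allocated strategy, whereas the real scheduler combines many filter and score plugins.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    name: str
    free_cpu_m: int   # unrequested CPU, in millicores
    free_mem_mi: int  # unrequested memory, in MiB
    cpu_m: int        # total allocatable CPU, in millicores
    mem_mi: int       # total allocatable memory, in MiB


def schedule(nodes: list, cpu_m: int, mem_mi: int) -> Optional[str]:
    """Pick a node for a Pod with the given resource requests, or None."""
    # 1. Filter: drop nodes that cannot fit the requests.
    feasible = [n for n in nodes if n.free_cpu_m >= cpu_m and n.free_mem_mi >= mem_mi]
    if not feasible:
        return None  # in Kubernetes, the Pod would stay Pending

    # 2. Score: prefer nodes left with the largest free fraction after placement.
    def score(n: Node) -> float:
        cpu_left = (n.free_cpu_m - cpu_m) / n.cpu_m
        mem_left = (n.free_mem_mi - mem_mi) / n.mem_mi
        return (cpu_left + mem_left) / 2

    # 3. Select: highest score wins (max() keeps the first node on ties).
    return max(feasible, key=score).name


if __name__ == "__main__":
    cluster = [
        Node("node-a", free_cpu_m=500, free_mem_mi=1024, cpu_m=2000, mem_mi=4096),
        Node("node-b", free_cpu_m=1500, free_mem_mi=3072, cpu_m=2000, mem_mi=4096),
        Node("node-c", free_cpu_m=100, free_mem_mi=8192, cpu_m=2000, mem_mi=8192),
    ]
    print(schedule(cluster, cpu_m=250, mem_mi=512))  # node-b: most headroom left
```

Here node-c is filtered out for lack of CPU, and node-b outscores node-a because it would keep more of its capacity free, spreading load across the cluster.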

About 8grams

We are a small DevOps Consulting Firm that has a mission to empower businesses with modern DevOps practices and technologies, enabling them to achieve digital transformation, improve efficiency, and drive growth.
