Kong: Setting Up the Most Popular API Gateway on a Kubernetes Cluster

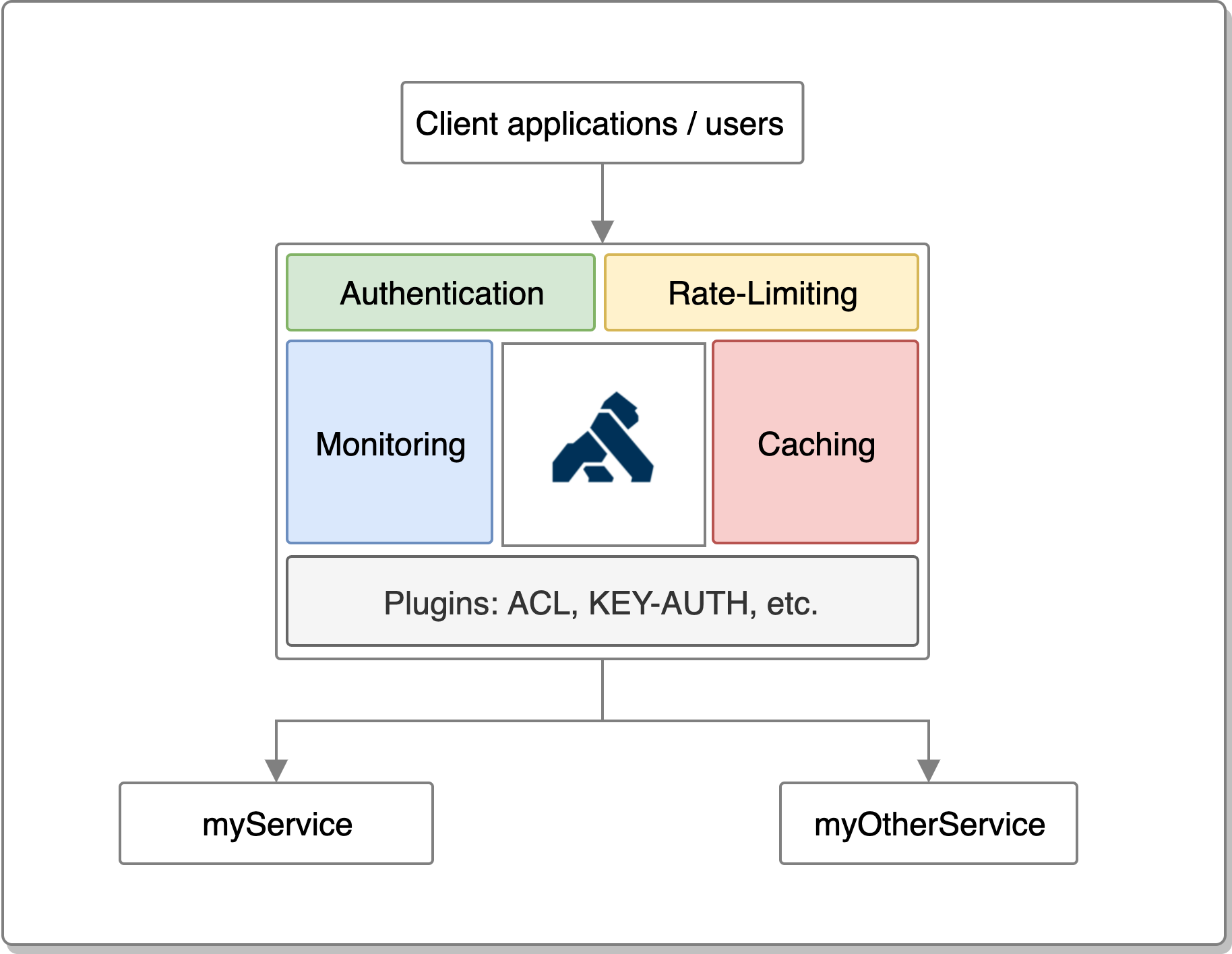

An API Gateway serves as a critical component in a microservices architecture by acting as a reverse proxy for your APIs.

Introduction

In this article, we're going to dive deep into the technical aspects of the Kong API Gateway, its purpose, key features, and how it compares to other popular solutions like Traefik and NGINX. Let's get started!

The Nuts and Bolts of API Gateway

An API Gateway serves as a critical component in a microservices architecture by acting as a reverse proxy for your APIs. It sits between clients and backend services, managing and routing API requests to the appropriate services. Here are some core functionalities of an API Gateway:

- Request routing: Directing API requests to the correct backend service based on the request path and method.

- Authentication & Authorization: Ensuring that only authorized clients can access the APIs by validating credentials, API keys, or tokens.

- Rate limiting: Controlling the number of requests a client can make within a specified time frame to prevent overloading backend services.

- Load balancing: Distributing API requests across multiple instances of a backend service to optimize resource utilization and ensure high availability.

- Logging & Monitoring: Collecting metrics, logs, and tracing information to track API performance and detect potential issues.

The Compelling Case for Using an API Gateway

Here's a closer look at some of the advantages of using an API Gateway:

- Enhanced Security: An API Gateway helps safeguard your backend services by implementing authentication, authorization, and even encryption policies, shielding your services from malicious attacks.

- Unified Management: By consolidating API management tasks, an API Gateway streamlines maintenance, updates, and monitoring, making it easier to manage and scale your services.

- Flexibility & Extensibility: API Gateways can be configured to add, modify, or remove functionality through plugins, enabling you to customize the gateway to meet your specific needs.

When building a backend system, the decision whether to use an API Gateway significantly affects both frontend and backend development. Let's examine the differences from both perspectives:

| Perspective | Without API Gateway | With API Gateway |
|---|---|---|
| Backend | Individual services handle tasks like authentication, rate limiting, and logging | API Gateway handles common tasks, allowing services to focus on core functionalities |
| | No unified entry point for API requests, making routing and load balancing more difficult | Single entry point simplifies request routing, versioning, and load balancing |
| | Each service may implement its own security measures, leading to inconsistent security policies | API Gateway enforces consistent security policies across all services |
| | Backend infrastructure may become harder to manage and scale as the number of services increases | Centralized management and monitoring make it easier to maintain and scale the backend infrastructure |
| Frontend | Interaction with multiple API endpoints, making API calls and error handling more difficult | Interaction with a single API entry point, simplifying API calls and error handling |
| | Inconsistencies in API design and security across backend services may lead to a challenging frontend development experience | API Gateway ensures consistency in API design, security, and error handling, providing a seamless frontend development experience |
| | Frontend developers may need to implement custom logic for tasks like authentication and rate limiting | API Gateway handles tasks such as authentication and rate limiting, allowing frontend developers to focus on core features |

A Deep Dive into Kong: The Open-Source API Gateway

Kong is a robust, open-source, cloud-native API Gateway built on top of the high-performance NGINX server. It offers a scalable, extensible, and easy-to-use solution for API management, backed by a thriving community.

Some of Kong's standout features include:

- Plugin Architecture: Kong's plugin-based architecture allows you to extend its functionality with custom plugins or leverage the extensive library of existing plugins for tasks like authentication, rate limiting, and logging.

- Flexible Deployment Options: Kong can be deployed in various environments, including on-premises, in the cloud, or even in hybrid setups, and supports Kubernetes, Docker, and VM installations.

- High Performance: Built on top of NGINX, Kong delivers exceptional performance, ensuring low-latency API request handling even under heavy loads.

Kong's Key Features and Killer Advantages

Here are some of Kong's most powerful features:

- Dynamic Load Balancing: Kong's built-in load balancer supports multiple load balancing algorithms, such as round-robin, consistent hashing, and least connections, for efficient request distribution.

- Health Checks & Circuit Breakers: Kong automatically monitors the health of backend services and can temporarily disable unhealthy instances to prevent cascading failures.

- gRPC Support: Kong supports gRPC, a modern, high-performance RPC framework, enabling you to manage gRPC services alongside RESTful APIs.

- Extensive API Security: Kong offers a wide range of security features, including OAuth 2.0, JWT, and ACL plugins, as well as the ability to integrate with third-party identity providers like Auth0 and Okta.

Comparing Kong with Traefik and NGINX

Let's take a closer look at how Kong stacks up against other popular API Gateway solutions like Traefik and NGINX:

| Feature | Kong | Traefik | NGINX |
|---|---|---|---|
| Reverse Proxy | Yes | Yes | Yes |
| Load Balancing | Yes | Yes | Yes |
| Health Checks | Yes | Yes | Passive only (active checks with NGINX Plus) |
| Rate Limiting | Yes | Yes | Yes |
| Authentication/Security | Yes | Yes | Basic (JWT validation with NGINX Plus) |
| gRPC Support | Yes | Yes | Yes |
| Plugin Architecture | Yes | Yes (middlewares and plugins) | No |
| Custom Plugins | Yes | Yes (written in Go) | Limited (with Lua scripting) |
| Kubernetes Integration | Yes | Yes | Yes |
| Dashboard/UI | Kong Enterprise Edition only | Yes (built-in, read-only) | NGINX Plus only |

Here's a brief comparison of these three API Gateways:

- Kong offers a powerful and extensible API Gateway with a rich ecosystem of plugins and a focus on flexibility. Its open-source nature and support for custom plugins make it an attractive choice for organizations looking to tailor their API management solution.

- Traefik is a more lightweight and simple API Gateway solution, primarily geared towards cloud-native environments and container-based deployments. It offers many core API Gateway features but lacks Kong's extensive plugin ecosystem.

- NGINX is a well-established, high-performance web server and reverse proxy that can also be used as an API Gateway. While NGINX offers excellent performance and reliability, the open-source version covers only the basics, such as request rate limiting and HTTP basic authentication. More advanced API management features, such as JWT validation and active health checks, require the commercial NGINX Plus version. Additionally, it lacks the plugin-driven architecture of Kong.

Konga: Dashboard for Kong

Konga is an open-source, community-driven management dashboard for the Kong API Gateway. It provides a user-friendly graphical interface to manage and monitor your Kong infrastructure. Konga allows you to configure and manage your Kong services, routes, plugins, and consumers, all through an intuitive web-based interface.

Kong on Kubernetes

Kong fully supports Kubernetes. In fact, Kong has a Kubernetes-native solution called the Kong Ingress Controller. The Kong Ingress Controller acts as an Ingress controller for Kubernetes, allowing you to manage Kong's configuration using Kubernetes resources like Ingress, Services, and ConfigMaps. It simplifies the process of integrating Kong API Gateway into your Kubernetes clusters.

Key Features of the Kong Ingress Controller:

- Native Kubernetes Integration: The Kong Ingress Controller is built specifically for Kubernetes and integrates seamlessly with Kubernetes resources.

- Dynamic Configuration: The Kong Ingress Controller watches the Kubernetes API for changes to relevant resources (such as Ingress, Services, and ConfigMaps) and updates Kong's configuration in real-time, without requiring manual intervention or restarts.

- Declarative Configuration: You can manage your Kong API Gateway configuration using familiar Kubernetes resources and manifest files, making it easier to version control and automate your infrastructure.

- Support for Custom Resources: The Kong Ingress Controller introduces custom Kubernetes resources like KongConsumer and KongPlugin, enabling you to manage Kong-specific configurations using Kubernetes-native resources.

- Full Kong Functionality: By using Kong Ingress Controller, you can leverage all the features and plugins available in Kong, including rate limiting, authentication, observability, and more.
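To make the custom resources above more concrete, here is a minimal sketch of a KongPlugin that enables Kong's rate-limiting plugin and attaches it to a Service through an annotation. The resource names (`rate-limit-5-per-minute`, `service-a`), the `app: service-a` selector, the ports, and the limit of 5 requests per minute are assumptions chosen for illustration, not values from this setup.

```yaml
# Illustrative sketch only: names, ports, and limits are hypothetical.
apiVersion: configuration.konghq.com/v1
kind: KongPlugin
metadata:
  name: rate-limit-5-per-minute
plugin: rate-limiting        # name of the Kong plugin to enable
config:
  minute: 5                  # allow 5 requests per minute per client
  policy: local              # keep counters in memory on each Kong node
---
apiVersion: v1
kind: Service
metadata:
  name: service-a
  annotations:
    # Attach the plugin above to all traffic routed to this Service.
    konghq.com/plugins: rate-limit-5-per-minute
spec:
  selector:
    app: service-a
  ports:
    - port: 80
      targetPort: 8080
```

The KongPlugin is a namespaced resource, so it should live in the same namespace as the Service that references it.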

Keep in mind that the deployment used in this article runs Kong in DB-less mode. There is no need to install a database (such as PostgreSQL) to store Kong's configuration. Instead, all configuration is managed declaratively through Kubernetes resources and manifest files.
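For intuition, DB-less Kong loads its entire configuration from a single declarative document. With the Ingress Controller you never write this file by hand, because the controller builds the equivalent configuration from your Kubernetes resources. The sketch below only shows roughly what that declarative format looks like; the service name, URL, route name, and limits are hypothetical.

```yaml
# Illustrative sketch of Kong's declarative (DB-less) configuration format.
# With the Kong Ingress Controller, an equivalent configuration is generated
# from Kubernetes resources, so this file is for understanding only.
_format_version: "3.0"
services:
  - name: service-a
    url: http://service-a.default.svc.cluster.local:80   # hypothetical upstream
    routes:
      - name: service-a-route
        paths:
          - /service-a
    plugins:
      - name: rate-limiting
        config:
          minute: 5
          policy: local
```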

Installing Kong on Kubernetes Cluster

Installing Kong on a Kubernetes cluster is pretty straightforward. There are three steps to set Kong up properly: install the Kong Deployment, set up an SSL issuer for Kong, and finally set up the Ingress Controller using Kong.

Install Kong Deployment

Kong provides deployment manifests that you can use to set it up on a Kubernetes cluster. These manifests contain the necessary Kubernetes resources, such as Deployments, Services, and ConfigMaps, to deploy and configure Kong on your Kubernetes cluster.

Download Manifest

~$ wget https://raw.githubusercontent.com/Kong/kubernetes-ingress-controller/v2.9.3/deploy/single/all-in-one-dbless.yaml

Install to Kubernetes

~$ kubectl apply -f all-in-one-dbless.yaml

Install SSL Issuer

We have already covered this step in an earlier article, though for the NGINX Ingress Controller.

The only tweak needed is to change ingress.class to kong in the issuer manifest.
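As a rough sketch, a cert-manager ClusterIssuer for Let's Encrypt with the ingress class set to kong could look like the following. This assumes cert-manager is already installed in the cluster; the issuer name `letsencrypt-prod`, the email address, and the secret name are placeholders, and this is not necessarily identical to the file used in the earlier article.

```yaml
# letsencrypt-issuer-kong.yaml
# Illustrative sketch: issuer name, email, and secret name are placeholders.
apiVersion: cert-manager.io/v1
kind: ClusterIssuer
metadata:
  name: letsencrypt-prod
spec:
  acme:
    # Let's Encrypt production ACME endpoint
    server: https://acme-v02.api.letsencrypt.org/directory
    email: admin@example.com
    privateKeySecretRef:
      # Secret where cert-manager stores the ACME account key
      name: letsencrypt-prod-account-key
    solvers:
      - http01:
          ingress:
            class: kong      # solve HTTP-01 challenges through Kong
```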

Install it

~$ kubectl apply -f letsencrypt-issuer-kong.yaml

Setup Ingress Controller

For simplicity, let's say we have three services: ServiceA, ServiceB, and ServiceC. We will set the Ingress hostname to api.example.com and provide three paths that route requests to each of our services:

- https://api.example.com/service-a for ServiceA

- https://api.example.com/service-b for ServiceB

- https://api.example.com/service-c for ServiceC
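The routing described above could be expressed with an Ingress along these lines. This is a sketch under assumptions: the Kubernetes Service names (`service-a`, `service-b`, `service-c`), port 80, the TLS secret name, and the `letsencrypt-prod` issuer name are hypothetical and should be adapted to your cluster.

```yaml
# Illustrative sketch: Service names, ports, and secret names are hypothetical.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: api-gateway
  annotations:
    # Hand this Ingress to Kong. If an IngressClass named "kong" exists,
    # spec.ingressClassName: kong can be used instead of this annotation.
    kubernetes.io/ingress.class: kong
    # Ask cert-manager to issue the TLS certificate (assumed issuer name).
    cert-manager.io/cluster-issuer: letsencrypt-prod
    # Strip the matched prefix before proxying, so ServiceA sees "/" rather
    # than "/service-a". Remove this if your services expect the prefix.
    konghq.com/strip-path: "true"
spec:
  tls:
    - hosts:
        - api.example.com
      secretName: api-example-com-tls
  rules:
    - host: api.example.com
      http:
        paths:
          - path: /service-a
            pathType: Prefix
            backend:
              service:
                name: service-a
                port:
                  number: 80
          - path: /service-b
            pathType: Prefix
            backend:
              service:
                name: service-b
                port:
                  number: 80
          - path: /service-c
            pathType: Prefix
            backend:
              service:
                name: service-c
                port:
                  number: 80
```

Saved to a file, it would be applied with kubectl apply -f in the same way as the manifests above.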

Boom! Now we have Kong API Gateway, the most popular API Gateway, installed on a Kubernetes cluster.

About 8grams

We are a small DevOps Consulting Firm that has a mission to empower businesses with modern DevOps practices and technologies, enabling them to achieve digital transformation, improve efficiency, and drive growth.
